From the very first digital camera, invented in 1975 and the size of a printer, to the compact cameras in today’s mobile phones, embedded vision technology has evolved by leaps and bounds. Once smartphones became popular, cameras began to be used to capture images for tasks like barcode reading, facial recognition, behavioral analysis, and quality inspection.

Later, with advancements in processing technology and the application of artificial intelligence in image and video analysis, embedded vision systems have gained more prominence over the last one to two decades. Another development has been the need for more than one camera to build new-age embedded vision applications.

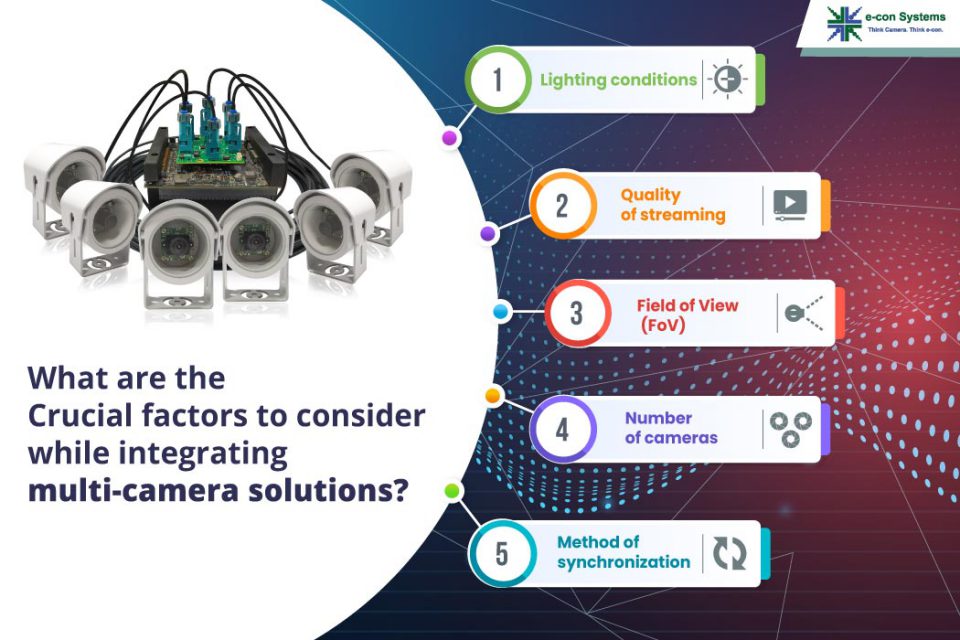

In this article, let’s go deep into the world of multi-camera solutions, some of their application use cases, and, most importantly – the key factors involved in integrating multi-camera solutions.

What are multi-camera solutions – and where are they used?

A multi-camera solution refers to a camera setup that involves more than a single camera. The required number of cameras depends on the nature of the application, and together they can perform either a single task or multiple ones. For instance, airport kiosks use multi-camera solutions in which the first camera handles iris recognition, the second performs facial recognition, and the third enables document scanning and verification.

Or let’s take a delivery robot that delivers packages without human supervision. Multiple cameras have to operate synchronously in this application to provide a 360-degree view. As you can imagine, the synchronization must be accurate, as the robot will use the surround view data to make navigation decisions in real time.

Other examples of applications that need multi-camera solutions include automated sports broadcasting systems, surround view systems, and autonomous tractors, just to name a few.

The journey to choosing the right multi-camera solution begins with evaluating camera parameters like resolution, frame rate, sensitivity, dynamic range, and any other feature your application demands. Selecting a multi-camera solution also requires analyzing your end application in detail. Since there is no universal approach, the choice of the number of cameras, the method of synchronization, the interface, and the platform depends entirely on the specifics of your application. Now, let’s dive into the major factors to consider while integrating multi-camera solutions.

Factors to consider while integrating multi-camera solutions

Number of cameras

The number of cameras depends on many factors like imaging accuracy, sharpness of details, the position of target objects, the Field of View (FoV), and the processing power of the host platform. Using more cameras can offer a higher combined resolution, reduce the lens distortion that comes with covering a scene through a single wide-angle lens, and enable a larger field of view.
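As a rough illustration of how the field of view drives the camera count, the sketch below estimates how many identical cameras are needed to cover a given horizontal angle. The function name `cameras_needed` and the `overlap_deg` parameter (the overlap left between neighbouring views, for example for stitching) are assumptions made for this example, and the calculation ignores mounting constraints, lens distortion, and vertical coverage.

```python
import math


def cameras_needed(total_fov_deg, camera_fov_deg, overlap_deg, closed_ring=False):
    """Estimate how many identical cameras cover total_fov_deg horizontally.

    overlap_deg is a hypothetical parameter: the angular overlap kept
    between neighbouring camera views.
    """
    if not 0 <= overlap_deg < camera_fov_deg:
        raise ValueError("overlap must be non-negative and smaller than the camera FoV")
    # New angle contributed by each additional camera.
    step = camera_fov_deg - overlap_deg
    if closed_ring:
        # In a full ring, every camera overlaps a neighbour on both sides.
        return math.ceil(total_fov_deg / step)
    # In an open arc, the first camera contributes its whole FoV.
    return max(1, math.ceil((total_fov_deg - overlap_deg) / step))


# Surround view (closed ring) with 100-degree lenses and 10 degrees of overlap.
print(cameras_needed(360, 100, 10, closed_ring=True))
# Open 180-degree panorama with 70-degree lenses and 15 degrees of overlap.
print(cameras_needed(180, 70, 15))
```

The result is only a starting point: the host platform still has to be able to ingest and process that many streams.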

Method of synchronization

Simply put, you must decide upon your synchronization approach. Software synchronization is preferred only when your application needs to capture static objects in controlled environments where frame-level synchronization is not critical. Hardware-level synchronization is recommended for better accuracy in syncing and capturing moving objects since it offers precise frame-level synchronization.
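To make the software approach more concrete, here is a minimal sketch of one common way to do it: matching frames from two cameras by their capture timestamps. This is an illustration only, not a description of any particular product. The function name `pair_frames`, the millisecond timestamps, and the tolerance value are all assumptions.

```python
def pair_frames(ts_a, ts_b, tolerance_ms):
    """Pair frames from two cameras whose timestamps differ by at most tolerance_ms.

    ts_a and ts_b are sorted lists of capture timestamps in milliseconds.
    Returns a list of (index_in_a, index_in_b) tuples.
    """
    pairs = []
    i = j = 0
    while i < len(ts_a) and j < len(ts_b):
        delta = ts_a[i] - ts_b[j]
        if abs(delta) <= tolerance_ms:
            pairs.append((i, j))
            i += 1
            j += 1
        elif delta < 0:
            i += 1  # frame from camera A is too old to match; skip it
        else:
            j += 1  # frame from camera B is too old to match; skip it
    return pairs


camera_a = [0, 33, 66, 100]
camera_b = [4, 50, 68, 99]
print(pair_frames(camera_a, camera_b, tolerance_ms=5))
```

Frames that find no partner within the tolerance are dropped, and even matched frames can be several milliseconds apart. That residual offset is why this kind of matching suits static scenes, while moving objects call for a hardware trigger.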

Camera interface

When picking the right interface, the main criterion is bandwidth. For example, the USB 3.0 interface has a maximum theoretical bandwidth of 5 Gbps. However, the bandwidth achievable in practice depends on the capabilities of the host system and the USB bus architecture. On the other hand, the MIPI CSI-2 interface can offer a bandwidth of up to 10 Gbps with four lanes. Hence, if your embedded vision application must capture high-resolution images at high frame rates, the MIPI interface should be your choice. Of course, the number of cameras that can be interfaced depends on the number of CSI ports supported by the host processor.
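A quick back-of-the-envelope calculation helps when comparing a camera stream against these figures. The sketch below computes the raw pixel data rate from resolution, frame rate, and bits per pixel. The function name `required_gbps` and the 16 bits per pixel (as in an uncompressed YUV 4:2:2 stream) are assumptions for illustration, and the result ignores protocol overhead and blanking, so real requirements will be somewhat higher.

```python
USB3_THEORETICAL_GBPS = 5.0   # USB 3.0 figure quoted in this article
MIPI_CSI2_4LANE_GBPS = 10.0   # four-lane MIPI CSI-2 figure quoted in this article


def required_gbps(width, height, fps, bits_per_pixel, cameras=1):
    """Raw pixel data rate in Gbps, ignoring protocol overhead and blanking."""
    return width * height * fps * bits_per_pixel * cameras / 1e9


# Assumed stream format: 16 bits per pixel at 60 fps.
full_hd = required_gbps(1920, 1080, 60, 16)
uhd_4k = required_gbps(3840, 2160, 60, 16)

for name, rate in [("Full HD @ 60 fps", full_hd), ("4K @ 60 fps", uhd_4k)]:
    print(f"{name}: {rate:.2f} Gbps, "
          f"within USB 3.0 limit: {rate <= USB3_THEORETICAL_GBPS}, "
          f"within 4-lane MIPI limit: {rate <= MIPI_CSI2_4LANE_GBPS}")
```

Under these assumptions, a single uncompressed 4K stream at 60 fps already exceeds the theoretical USB 3.0 figure but stays within the four-lane MIPI CSI-2 figure. The `cameras` argument can be used to estimate the aggregate rate when several streams share one link.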

The choice of interface is also determined by other factors such as the distance over which data has to be transferred, the reliability of transfer, and the compatibility of your host platform. For instance, the MIPI interface can reliably transfer data up to a distance of 50 cm. If you need to transfer data from a MIPI camera beyond that distance, the GMSL interface is recommended as it can transfer data up to a distance of 15 meters.

Host platform

The host platform is selected based on factors like processing power, form factor, and cost. If you are using a PC or x86-based host, the only practical interfaces are USB and Ethernet. But if you use an edge-based processor, you can use an interface like MIPI or GMSL, which offer higher bandwidth. Remember that your host platform must smoothly process the data transferred by your cameras. The higher the resolution and frame rate of the images transferred, the more processing power your platform needs.
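The rules of thumb on host type and cable length can be combined into a small shortlist helper, sketched below. The function name `candidate_interfaces` and the host labels `"x86"` and `"edge"` are hypothetical. The distance limits are the ones quoted in this article, and the sketch deliberately leaves out bandwidth, cost, and cable limits for USB and Ethernet, which the article does not specify.

```python
MIPI_MAX_CABLE_M = 0.5    # "up to 50 cm", as quoted in this article
GMSL_MAX_CABLE_M = 15.0   # "up to 15 meters", as quoted in this article


def candidate_interfaces(host_type, cable_length_m):
    """Return a shortlist of interfaces to evaluate for a camera link.

    host_type is a hypothetical label: "x86" for PC-class hosts or
    "edge" for edge processors.
    """
    if host_type == "x86":
        # Cable limits for USB and Ethernet are not covered here.
        return ["USB", "Ethernet"]
    if host_type != "edge":
        raise ValueError(f"unknown host type: {host_type!r}")
    if cable_length_m <= MIPI_MAX_CABLE_M:
        return ["MIPI CSI-2", "GMSL"]
    if cable_length_m <= GMSL_MAX_CABLE_M:
        return ["GMSL"]
    return []  # beyond the distances discussed in this article


print(candidate_interfaces("edge", 0.3))
print(candidate_interfaces("edge", 5.0))
print(candidate_interfaces("x86", 2.0))
```

An empty list simply means the link is longer than any distance discussed here, so it needs a closer look rather than a quick rule of thumb.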

There is a wide variety of processors available in the market today, with the NVIDIA Jetson series being among the most popular and advanced. Others include the NXP i.MX series, as well as processors from Qualcomm and Texas Instruments.

Successfully executing a multi-camera integration project also involves a few other factors like:

- Picking the right camera sensor

- Optimizing for the lowest possible latency

- Ensuring the optimal camera placement or position

Flagship multi-camera solutions developed by e-con Systems

Given the complexity involved in multi-camera integration, it is always recommended to seek help from an imaging expert like e-con Systems. We have implemented numerous multi-camera integrations for customers across the industrial, retail, smart city, medical, and sports industries. One of our key differentiators is a thriving partner ecosystem with sensor manufacturers like Sony, Onsemi, and Omnivision. We are also an elite partner to NVIDIA, a leading provider of edge processors.

Some of our flagship multi-camera solutions are:

• STURDeCAM31 – an IP67-rated Full HD GMSL2 multi-camera solution with the HDR feature and based on the ISX031 sensor from Sony®.

• STURDeCAM20 – an IP67-rated Full HD GMSL2 multi-camera solution with the HDR feature and based on the AR0230 sensor from Onsemi.

• STURDeCAM25 – an IP67-rated Full HD GMSL2 color global shutter camera based on the AR0234 sensor from Onsemi.

• 180-degree stitching solution – three of e-con’s 13MP camera modules are connected to NVIDIA Jetson AGX Xavier with a proprietary stitching algorithm running on CUDA GPU.

• NileCAM81 – a 4K GMSL2 multi-camera solution with high sensitivity based on the AR0821 sensor from Onsemi.

e-con Systems has made camera evaluation easy by offering readily available multi-camera solutions with 2-8 cameras in a single system. We also offer extensive customization services to make sure that the camera can be tailored to your unique application needs.

Please see our Camera Selector to check out our entire product portfolio. You can also write to camerasolutions@e-consystems.com if you need our expertise in integrating a multi-camera system into your applications.

Ranjith is a camera solution architect with over 16 years of experience in embedded product development, electronics design, and product solutioning. At e-con Systems, he has been responsible for building 100+ vision solutions for customers spanning multiple areas within retail, including self-service kiosks, access control systems, smart checkouts and carts, retail monitoring systems, and much more.