Advancements in machine learning, artificial intelligence, embedded vision, and processing technology have helped innovators build autonomous machines that can navigate an environment with little human supervision. Examples of such devices include AMRs (Autonomous Mobile Robots), autonomous tractors, and automated forklifts.

Making these devices truly autonomous requires them to move around without any manual navigation. This, in turn, requires the ability to measure depth for mapping, localization, path planning, and obstacle detection and avoidance. This is where depth-sensing cameras come into play: they give machines a 3D perspective of their surroundings.

In this blog, we will learn what depth-sensing cameras are, the different types, and how each of them works. We will also look at the most popular embedded vision applications that use these new-age cameras.

Different Types of Depth-Sensing Cameras and Their Functionalities

Depth sensing is the measurement of the distance from a device to an object, or of the distance between two objects. A 3D depth-sensing camera is used for this purpose: it automatically detects nearby objects and continuously measures the distance to them. This lets the device or equipment that integrates the camera move autonomously by making intelligent decisions in real time.

Out of all the depth technologies available today, three of the most popular and commonly used ones are:

- Stereo vision

- Time of flight

  - Direct Time-of-Flight (dToF)

  - LiDAR

  - Indirect Time-of-Flight (iToF)

- Structured light

Next, let us look at the working principle of each of the above-listed depth-sensing technologies in detail.

Stereo vision

A stereo camera relies on the same principle as human eyesight: binocular vision. Human binocular vision uses what is called stereo disparity to perceive the depth of an object. Stereo disparity is the difference in an object's position as seen by two different sensors or cameras (the two eyes, in the case of humans), and this difference can be used to measure the distance to the object.

The image below illustrates this concept:

Figure 1 – stereo disparity

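For a rectified stereo pair, stereo disparity converts to depth through the standard pinhole-camera triangulation relation. The symbols here are introduced for illustration: x_L and x_R are the horizontal pixel positions of the same point in the left and right images, f is the focal length in pixels, B is the baseline (the distance between the two cameras), and Z is the depth.

```latex
d = x_L - x_R, \qquad Z = \frac{f\,B}{d}
```

With hypothetical values f = 700 px, B = 0.12 m, and a measured disparity d = 42 px, this gives Z = (700 × 0.12 m) / 42 = 2 m. Because Z is inversely proportional to d, distant objects produce small disparities, so depth resolution degrades as range increases.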

In the case of a stereo camera, depth is calculated by an algorithm that usually runs on the host platform. However, for the camera to work effectively, the two images need sufficient detail and texture so that the same point can be matched in both. Because outdoor scenes usually offer plenty of natural texture, stereo cameras are recommended for outdoor applications that have a large field of view.
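The matching step that such a host-side algorithm performs can be sketched as follows. This is one simple, commonly taught approach (sum-of-absolute-differences block matching along one row of a rectified image pair), not the algorithm of any particular camera; the function names, the 1D row input, and the parameters `half_window`, `max_disparity`, `focal_px`, and `baseline_m` are hypothetical. It also shows why texture matters: on a uniform row every candidate matches equally well, so no disparity can be chosen.

```python
from typing import List, Optional


def sad(a: List[int], b: List[int]) -> int:
    """Sum of absolute differences between two equal-length pixel windows."""
    return sum(abs(x - y) for x, y in zip(a, b))


def match_disparity(left: List[int], right: List[int], x: int,
                    half_window: int = 2, max_disparity: int = 16) -> Optional[int]:
    """Disparity (in pixels) of left-row pixel x, found by searching the same
    row of the rectified right image; None if no unambiguous match exists."""
    if x - half_window < 0 or x + half_window >= len(left):
        return None  # window would fall off the image
    ref = left[x - half_window: x + half_window + 1]
    costs = {}
    for d in range(max_disparity + 1):
        xr = x - d  # a point at column x in the left image appears at x - d in the right
        if xr - half_window < 0:
            break
        costs[d] = sad(ref, right[xr - half_window: xr + half_window + 1])
    if not costs:
        return None
    best = min(costs.values())
    winners = [d for d, c in costs.items() if c == best]
    # A textureless window matches several candidates equally well: reject it.
    return winners[0] if len(winners) == 1 else None


def depth_from_disparity(d: int, focal_px: float, baseline_m: float) -> Optional[float]:
    """Triangulate depth Z = f * B / d; zero disparity means 'too far to resolve'."""
    if d <= 0:
        return None
    return focal_px * baseline_m / d


if __name__ == "__main__":
    # Hypothetical textured row; the right camera sees the same pattern 3 px to the left.
    left = [10] * 5 + [50, 90, 30, 70] + [10] * 11
    right = left[3:] + [10] * 3
    d = match_disparity(left, right, x=7)
    print(d, depth_from_disparity(d, focal_px=700.0, baseline_m=0.12))  # prints: 3 28.0
```

Real stereo pipelines typically add rectification, sub-pixel refinement, and stronger cost aggregation (for example, semi-global matching); this sketch leaves those out to keep the core idea visible.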

Stereo vision cameras are extensively used in medical imaging, AR and VR applications, 3D reconstruction and mapping, and more.

To learn more about the working principle of stereo cameras, please read: What is a stereo vision camera?

Time of Flight Camera

Time of flight (ToF) refers to the time taken by light to travel a given distance. Time-of-flight cameras work on this principle: they estimate the distance to an object from the time the emitted light takes to return to the sensor after reflecting off the object's surface.

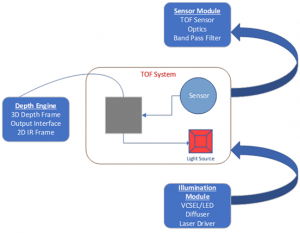
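Because the light travels to the object and back, the measured round-trip time Δt covers twice the distance d. With c as the speed of light (about 3 × 10^8 m/s) and an illustrative round trip of 10 ns:

```latex
d = \frac{c\,\Delta t}{2}, \qquad
\Delta t = 10\,\mathrm{ns} \;\Rightarrow\;
d = \frac{(3 \times 10^{8}\,\mathrm{m/s})(10 \times 10^{-9}\,\mathrm{s})}{2} = 1.5\,\mathrm{m}
```

Turned around, a 1 cm change in distance changes the round trip by only about 67 ps (2 × 0.01 m / c), which is why ToF sensors need very precise timing or phase measurement.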

A time of flight camera has three major components:

- ToF sensor and sensor module

- Light source

- Depth processor

The architecture of a time-of-flight camera is given below:

Figure 2 – architecture of a time of flight camera


The sensor and sensor module are responsible for collecting the light reflected from the target object; the sensor converts the collected light into raw pixel data. The light source is either a VCSEL (vertical-cavity surface-emitting laser) or an LED, typically emitting in the NIR (near-infrared) region. The depth processor converts the raw pixel data from the sensor into depth information. It also performs noise filtering and provides 2D IR images that the end application can use for other purposes.
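The depth processor's two main jobs, converting raw pixel data into depth and filtering noise, can be sketched as below. This is an illustration under simplifying assumptions, not the processing of any real ToF chip: it assumes (hypothetically) that the raw data has already been reduced to a per-pixel round-trip time plus a per-pixel return amplitude (the kind of signal a 2D IR image is built from), and it uses a plain 3x3 median filter as the noise filter. The names `times_to_depth`, `median_filter_3x3`, and `min_amplitude` are hypothetical.

```python
from statistics import median
from typing import List, Optional

C = 299_792_458.0  # speed of light in m/s

Grid = List[List[Optional[float]]]


def times_to_depth(round_trip_s: List[List[float]],
                   amplitude: List[List[float]],
                   min_amplitude: float) -> Grid:
    """Depth (m) per pixel from round-trip time via d = c * t / 2.
    Pixels whose return signal is weaker than min_amplitude are marked invalid (None)."""
    return [[C * t / 2.0 if a >= min_amplitude else None
             for t, a in zip(t_row, a_row)]
            for t_row, a_row in zip(round_trip_s, amplitude)]


def median_filter_3x3(depth: Grid) -> Grid:
    """Replace each valid pixel with the median of the valid pixels in its
    3x3 neighbourhood; invalid pixels stay invalid."""
    h, w = len(depth), len(depth[0])
    out: Grid = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if depth[y][x] is None:
                continue
            window = [depth[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))
                      if depth[j][i] is not None]
            out[y][x] = median(window)
    return out


if __name__ == "__main__":
    # Hypothetical 3x3 frame: a flat surface ~1.5 m away with one noisy centre pixel.
    times = [[10e-9] * 3 for _ in range(3)]
    times[1][1] = 20e-9
    amps = [[1.0] * 3 for _ in range(3)]
    depth = times_to_depth(times, amps, min_amplitude=0.5)
    filtered = median_filter_3x3(depth)
    print(round(depth[1][1], 3), round(filtered[1][1], 3))  # prints: 2.998 1.499
```

A median filter is used here because it removes an isolated outlier without averaging it into its neighbours; production depth processors generally use more elaborate, edge-aware filtering.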

ToF cameras are commonly used in applications such as smartphones and consumer electronics, biometric authentication and security, autonomous vehicles and robotics, etc.

Based on how the distance is determined, ToF comes in two types: Direct Time of Flight (dToF) and Indirect Time of Flight (iToF). Let us look at each of these in detail, along with their differences.

Direct Time of Flight (dToF)

The direct ToF method works by scanning the scene with pulses of invisible infrared laser light and analyzing the light reflected by objects in the scene to measure their distance.

The distance from the dToF camera to an object is measured by computing the time a pulse of light takes to travel from the emitter to the object and back again. For this, the dToF camera uses a sensing pixel called a Single Photon Avalanche Diode (SPAD). The SPAD detects the sudden spike in photons when the pulse of light is reflected back from the object, so the camera can time the interval between emitting the pulse and detecting the spike.
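One common way a dToF system turns SPAD detections into a distance is time-correlated single-photon counting: photon arrival times from many laser pulses are collected into a histogram, ambient-light photons spread across all bins, and the reflected pulse piles up in one bin. The sketch below assumes that approach, a 1 ns bin width, and hypothetical arrival times; the function names are also hypothetical, and a real device performs this histogramming in on-chip hardware.

```python
from typing import List

C = 299_792_458.0  # speed of light in m/s


def build_histogram(arrivals_s: List[float], bin_width_s: float, n_bins: int) -> List[int]:
    """Count photon arrival times (measured from each laser pulse) into time bins."""
    hist = [0] * n_bins
    for t in arrivals_s:
        b = int(t // bin_width_s)
        if 0 <= b < n_bins:
            hist[b] += 1
    return hist


def distance_from_histogram(hist: List[int], bin_width_s: float) -> float:
    """Take the fullest bin as the reflected pulse and convert its centre time to distance."""
    peak = max(range(len(hist)), key=hist.__getitem__)
    round_trip = (peak + 0.5) * bin_width_s
    return C * round_trip / 2.0


if __name__ == "__main__":
    # Hypothetical arrivals: scattered ambient photons plus a cluster from the target echo.
    ambient = [3.1e-9, 12.6e-9, 47.5e-9, 72.9e-9, 88.2e-9]
    echo = [20.2e-9, 20.3e-9, 20.4e-9, 20.6e-9, 20.8e-9]
    hist = build_histogram(ambient + echo, bin_width_s=1e-9, n_bins=100)
    print(f"{distance_from_histogram(hist, 1e-9):.2f} m")  # prints: 3.07 m
```

The bin width sets the basic depth resolution: with 1 ns bins, each bin spans about 15 cm of distance, so practical systems use finer bins or interpolate around the peak.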


Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, and has more than 15 years of experience in the embedded vision space. He brings deep knowledge of USB cameras, embedded vision cameras, vision algorithms, and FPGAs. He has built 50+ camera solutions spanning domains such as medical, industrial, agriculture, retail, and biometrics. He also has expertise in device driver development and BSP development. Currently, Prabu's focus is on building smart camera solutions that power new-age AI-based applications.