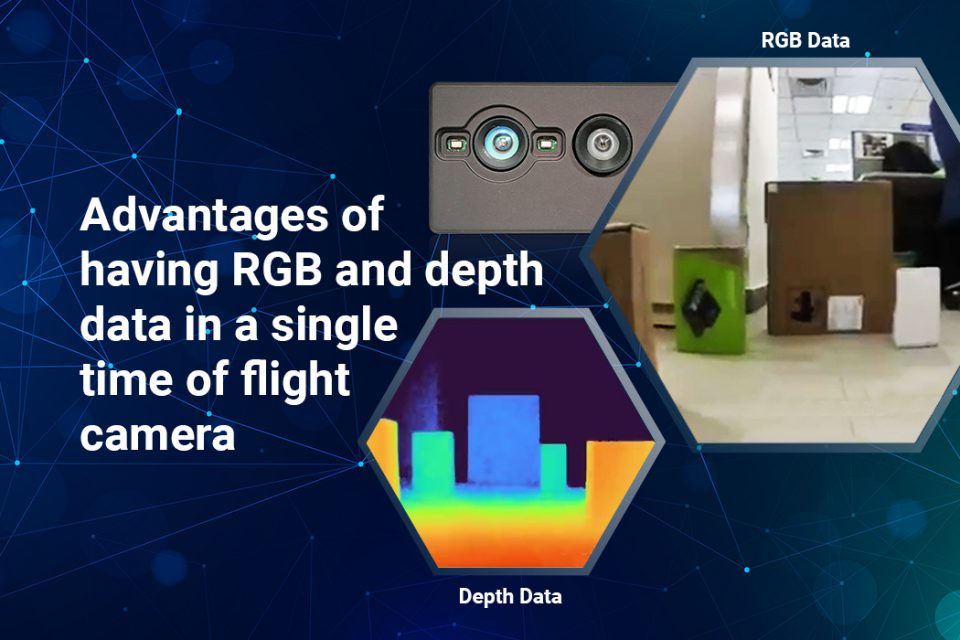

Time-of-flight (ToF) cameras are among the most popular depth-sensing technologies on the market today. They illuminate the scene and measure depth from the light that returns, which makes them well suited to obstacle detection for autonomous navigation. Two of the most common applications for a time-of-flight camera are autonomous mobile robots (AMRs) and automated guided vehicles (AGVs). To measure depth, a time-of-flight camera only has to deliver the depth data of the scene. However, the ability to stream RGB data along with it offers certain advantages. In this article, we look at those advantages and at some of the use cases where you might need this feature.

Why don’t depth sensors capture color information by default?

Depth cameras measure the distance (or depth) to an object or surface. The most popular technologies for depth sensing are stereo cameras and time-of-flight cameras. Stereo cameras measure depth from stereo disparity, whereas time-of-flight cameras, including LiDAR sensors (LiDAR is a type of time-of-flight sensor), illuminate the scene and measure how long the emitted light takes to return.
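The two measurement principles can be illustrated with a short sketch. It uses the textbook relations for a direct (pulsed) time-of-flight measurement and for a rectified stereo pair; the function names and sample numbers are made up for illustration, and many real time-of-flight cameras measure a phase shift rather than a raw round-trip time.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_depth_m(round_trip_time_s: float) -> float:
    """Depth from the round-trip time of emitted light.

    The light travels to the object and back, so the distance is half
    of the total path length.
    """
    return SPEED_OF_LIGHT_M_S * round_trip_time_s / 2.0


def stereo_depth_m(focal_length_px: float, baseline_m: float,
                   disparity_px: float) -> float:
    """Depth from stereo disparity for a rectified camera pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Illustrative values only: a 20 ns round trip and a 16-pixel disparity
# (800 px focal length, 6 cm baseline) both correspond to roughly 3 m.
print(tof_depth_m(20e-9))
print(stereo_depth_m(800.0, 0.06, 16.0))
```

Neither calculation uses color at any point, which is the reason depth sensors can work without it.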

One of the key differences between LiDAR and other forms of time-of-flight sensors is that LiDAR uses pulsed lasers to build a point cloud, from which a 3D map is constructed. Other time-of-flight sensors instead use light detection to create ‘depth maps’, typically using an embedded camera. In none of these cases is color information required to calculate depth, and adding it would increase the computational load on the camera system. For these reasons, an ordinary depth camera does not come with the option to deliver color (RGB) data.

How does having depth and RGB data in a single frame help?

As discussed above, a depth camera such as a time-of-flight camera does not capture color information by default. In applications that require object recognition, this is a disadvantage, because identifying the type of a target object in most cases requires RGB data.

For instance, consider an autonomous tractor. It has to detect when anything comes close to the PTO (Power Take-Off) unit. For safety reasons, it has to be more cautious with a human than with an animal or an object: the tractor might stop a few meters away from a human, but only a few centimeters away from an animal or an object. This in turn requires the camera system in the tractor to identify the type of the approaching object, which is possible only by analyzing RGB data.

Now that we understand the need for capturing RGB data with a time-of-flight camera, the next obvious question is: why do both the depth and the RGB data need to be delivered in a single frame? When the two are captured in separate frames, you have to match the depth frame and the color frame pixel to pixel, so that each pixel in the RGB frame is merged with the corresponding pixel in the depth frame. This requires additional processing, and achieving accuracy with it is always a challenge. Delivering both pieces of data in a single frame removes this step.
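To give a sense of the processing involved when depth and color arrive as separate frames, here is a minimal sketch of depth-to-color registration with a pinhole camera model. The intrinsic matrices `K_depth` and `K_color`, the rotation `R`, and the translation `t` are assumed calibration values invented for this example; they do not describe any particular camera.

```python
import numpy as np


def register_depth_to_color(depth_m, K_depth, K_color, R, t):
    """Return the color-image coordinates (u_c, v_c) of every depth pixel.

    depth_m : (H, W) depth in meters, 0 where no measurement exists
    K_depth, K_color : 3x3 pinhole intrinsics (assumed calibration)
    R, t : rotation (3x3) and translation (3,) from depth to color camera
    Pixels without a valid depth are returned as NaN.
    """
    h, w = depth_m.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth_m.astype(np.float64)

    # Back-project each depth pixel to a 3D point in the depth camera frame.
    x = (u - K_depth[0, 2]) * z / K_depth[0, 0]
    y = (v - K_depth[1, 2]) * z / K_depth[1, 1]
    points = np.stack([x, y, z], axis=-1)

    # Move the points into the color camera frame.
    points_c = points @ R.T + t
    z_c = points_c[..., 2]

    # Project the points onto the color image plane.
    with np.errstate(divide="ignore", invalid="ignore"):
        u_c = K_color[0, 0] * points_c[..., 0] / z_c + K_color[0, 2]
        v_c = K_color[1, 1] * points_c[..., 1] / z_c + K_color[1, 2]

    ok = (z > 0) & (z_c > 0)
    return np.where(ok, u_c, np.nan), np.where(ok, v_c, np.nan)


# Illustrative setup: identical intrinsics, sensors 5 cm apart, flat wall at 2 m.
K = np.array([[500.0, 0.0, 3.0], [0.0, 500.0, 2.0], [0.0, 0.0, 1.0]])
depth = np.full((4, 6), 2.0)
u_c, v_c = register_depth_to_color(depth, K, K, np.eye(3), np.array([0.05, 0.0, 0.0]))
print(u_c[1, 2] - 2)  # horizontal shift in pixels, about 12.5 at 2 m
```

The shift between the two images depends on the depth of each pixel (about 12.5 pixels at 2 m in this setup, about 25 pixels at 1 m), so a fixed offset is not enough and every pixel has to be remapped. Errors in calibration or in the depth values translate directly into mismatched pixels.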

Embedded vision applications that need both RGB and depth data in one frame

The need for having the two types of data in a single frame depends entirely on the end application. Let us take the same example of autonomous tractors. This feature is required only if the tractor manufacturer wants to identify the object for improved safety. If the objective is just to detect an obstacle without recognizing it, a normal depth camera or time-of-flight camera will do the job. The same holds for any other autonomous vehicle. Assuming that object recognition is required, the following are some other embedded vision applications that need a combination of depth and RGB data in a single frame:

- Autonomous mobile robots such as cleaning robots, goods-to-person robots, service robots, and companion robots

- Robotic arms

- Automated guided vehicles

- People counting systems
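In applications like these, the object label typically comes from the RGB data and the distance from the depth data at the same pixels. The sketch below shows that combination for the tractor example, assuming the depth map is already aligned with the color image. The label names, bounding-box format, and stop distances are hypothetical placeholders, and the object detector that would produce the label and box is not shown.

```python
import numpy as np

# Hypothetical safety distances: a few meters for a person,
# a few tens of centimeters for anything else.
STOP_DISTANCE_M = {"person": 3.0}
DEFAULT_STOP_DISTANCE_M = 0.3


def object_distance_m(depth_m, box):
    """Median valid depth inside a bounding box (x0, y0, x1, y1) in pixels."""
    x0, y0, x1, y1 = box
    patch = depth_m[y0:y1, x0:x1]
    valid = patch[patch > 0]  # 0 marks pixels without a depth measurement
    if valid.size == 0:
        return None
    return float(np.median(valid))


def should_stop(depth_m, label, box):
    """Decide whether to stop, given a label from RGB and aligned depth."""
    distance = object_distance_m(depth_m, box)
    if distance is None:
        return True  # no usable depth: fail safe
    return distance <= STOP_DISTANCE_M.get(label, DEFAULT_STOP_DISTANCE_M)


# Illustrative frame: everything in view is 2 m away.
depth = np.full((480, 640), 2.0)
print(should_stop(depth, "person", (300, 200, 340, 280)))
print(should_stop(depth, "animal", (300, 200, 340, 280)))
```

The same box indexes both the color image and the depth map only because the two are aligned pixel to pixel, which is what a single combined frame provides.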

e-con Systems’ time of flight camera with depth and RGB data in a single frame

e-con Systems, drawing on more than a decade of experience in depth-sensing technologies, has developed DepthVista, a time-of-flight camera that delivers both depth information and RGB data in one frame for simultaneous depth measurement and object recognition. DepthVista combines a CCD sensor (for depth measurement) with the AR0234 color global shutter sensor from Onsemi (for object recognition). The depth sensor streams at 640 x 480 @ 30fps, and the color global shutter sensor streams HD and FHD @ 30fps. This ability to read color and depth information in one go makes the camera suitable for applications that require both depth measurement and object recognition. To learn more about the features and applications of DepthVista, please visit the product page.

We hope this article helped you understand how RGB and depth data can work together to let your autonomous vehicle combine obstacle detection with object recognition. If you need help integrating this time-of-flight camera into your machine or device, please write to us at camerasolutions@e-consystems.com. You can also visit the Camera Selector to see our complete portfolio of cameras.

Vinoth Rajagopalan is an embedded vision expert with 15+ years of experience in product engineering management, R&D, and technical consultations. He has been responsible for many success stories at e-con Systems, from pre-sales and product conceptualization to launch and support. Having started his career as a software engineer, he currently leads a world-class team that handles major product development initiatives.