NVIDIA® introduced the Jetson Nano™ SOM, a low-cost, small-form-factor, powerful, and low-power AI edge computing platform, at the GTC show in 2019.

The Jetson Nano™ SOM provides 12 MIPI CSI-2 D-PHY lanes, which can be used for camera interfaces as either four 2-lane or three 4-lane MIPI CSI-2 interfaces. However, the Jetson Nano™ development kit is limited to a single 2-lane MIPI CSI-2 configuration.

e-con Systems™ launched e-CAM30_CUNANO, a 3.4 MP 2-lane MIPI CSI-2 custom lens camera board for the NVIDIA® Jetson Nano™ development kit. This camera is based on the 1/3″ AR0330 CMOS image sensor from ON Semiconductor® with a 2.2 µm pixel size.

e-CAM30_CUNANO is a multi-board solution consisting of two boards:

- Camera module (e-CAM30A_CUMI0330_MOD)

- Adaptor board (ACC_NANO_ADP)

The e-CAM30_CUNANO camera module has two 20-pin Samtec connectors (CN1 and CN2) for mating with the e-CAM30_CUNANO adaptor board, which supplies the required voltage to the camera module. The adaptor board has a 15-pin FFC connector (CN3), through which the camera module is connected to the Jetson Nano™ development kit using a 15 cm FPC cable. e-CAM30_CUNANO connected to the Jetson Nano™ development kit is shown below.

Figure 1: e-CAM30_CUNANO connected to Jetson Nano™ Dev. Kit

e-CAM30_CUNANO is exposed as a standard V4L2 device, so customers can use the V4L2 APIs to access and control the camera. e-con Systems™ also provides the ecam_tk1_guvcview sample application, which demonstrates the features of this camera.
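Because the camera is exposed as a standard V4L2 device, the usual V4L2 ioctls apply to its node. As a minimal sketch (not taken from e-con's sample application), the C++ below opens a node, issues VIDIOC_QUERYCAP, and reports the driver name, the card name, and whether the node supports video capture. The `CameraInfo` struct and `query_camera()` function are hypothetical names, and `/dev/video0` in the usage note is only an example path.

```cpp
#include <fcntl.h>            // open, O_RDWR
#include <sys/ioctl.h>        // ioctl
#include <unistd.h>           // close
#include <linux/videodev2.h>  // VIDIOC_QUERYCAP, v4l2_capability, V4L2_CAP_*
#include <cstdint>
#include <cstring>            // std::memset
#include <string>

// Hypothetical result type for one V4L2 node.
struct CameraInfo {
    std::string driver;        // kernel driver name
    std::string card;          // human-readable device name
    bool can_capture = false;  // true if the node supports video capture
};

// Opens a V4L2 node (e.g. "/dev/video0") and fills 'info' via VIDIOC_QUERYCAP.
// Returns false if the node cannot be opened or the ioctl fails.
bool query_camera(const std::string& device, CameraInfo& info)
{
    int fd = open(device.c_str(), O_RDWR);
    if (fd < 0)
        return false;

    v4l2_capability cap;
    std::memset(&cap, 0, sizeof(cap));
    const bool ok = ioctl(fd, VIDIOC_QUERYCAP, &cap) == 0;
    close(fd);
    if (!ok)
        return false;

    info.driver = reinterpret_cast<const char*>(cap.driver);
    info.card = reinterpret_cast<const char*>(cap.card);
    // device_caps describes this particular node when V4L2_CAP_DEVICE_CAPS is
    // set; otherwise fall back to the capabilities of the whole device.
    const std::uint32_t caps = (cap.capabilities & V4L2_CAP_DEVICE_CAPS)
                                   ? cap.device_caps
                                   : cap.capabilities;
    info.can_capture = (caps & V4L2_CAP_VIDEO_CAPTURE) != 0;
    return true;
}
```

For example, calling `query_camera("/dev/video0", info)` on the development kit and printing `info.card` shows which device sits behind that node; a `false` return means the node could not be opened or did not answer the query.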

The following table lists the supported resolutions and frame rates of e-CAM30_CUNANO camera module.

| Sl. No. | Resolution | Frame Rate (fps) |
|---|---|---|
| 1 | VGA (640×480) | 60 |
| 2 | HD (1280×720) | 60 |
| 3 | FHD (1920×1080) | 60 |
| 4 | 3MP (2304×1296) | 45 |
| 5 | 3.4MP (2304×1536) | 38 |
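Since the camera streams UYVY, a packed 4:2:2 format with 2 bytes per pixel, the table can be turned into raw data rates with simple arithmetic. The sketch below is an approximation that ignores blanking intervals and MIPI CSI-2 protocol overhead; `uyvy_bytes_per_second()` is a hypothetical helper.

```cpp
#include <cstdint>

// Approximate raw data rate of an uncompressed UYVY stream.
// UYVY packs two pixels into four bytes, i.e. 2 bytes per pixel.
// Blanking and MIPI CSI-2 packet overhead are not included.
constexpr std::uint64_t uyvy_bytes_per_second(std::uint64_t width,
                                              std::uint64_t height,
                                              std::uint64_t fps)
{
    return width * height * 2 * fps;
}
```

For example, the 3.4MP mode (2304×1536 at 38 fps) works out to 268,959,744 bytes/s, roughly 269 MB/s of pixel data, while VGA at 60 fps needs 36,864,000 bytes/s.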

Jetson Nano™ supports a wide variety of ML frameworks, such as TensorFlow, PyTorch, Caffe/Caffe2, Keras, and MXNet. These frameworks can help us build autonomous machines and complex AI systems with capabilities such as image recognition, object detection and pose estimation, semantic segmentation, video enhancement, and intelligent analytics.

Inspired by the “Hello, AI World” NVIDIA® webinar, e-con Systems™ successfully ran the Jetson-inference engine with the e-CAM30_CUNANO camera on the Jetson Nano™ development kit.

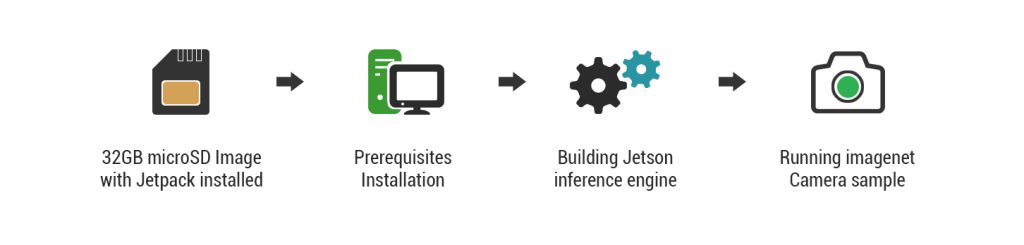

The process flow diagram to build and run the Jetson-inference engine on Jetson Nano™ is shown below.

Figure 2: Building and Running Jetson-inference Engine on Jetson Nano

Reference Link: https://github.com/dusty-nv/jetson-inference/blob/master/docs/building-repo.md

After the Jetson-inference engine is installed, the following examples are available on Jetson Nano™:

- detectnet-camera: Object detection with a camera as input.

- detectnet-console: Object detection with an image as input instead of a camera.

- imagenet-camera: Image classification with a camera as input.

- imagenet-console: Image classification of an input image, using a network pre-trained on the ImageNet dataset.

- segnet-camera: Semantic segmentation with a camera as input.

- segnet-console: Semantic segmentation with an image as input.

Of the Jetson-inference examples listed above, e-con used the imagenet-camera example to test image classification performance with e-con’s e-CAM30_CUNANO camera on Jetson Nano™.

To run the Jetson-inference engine with the e-con e-CAM30_CUNANO camera, modify the Jetson-inference source code as described in the following steps:

1. Change the DEFAULT_CAMERA device node index to the e-con camera's video node index.

On line 37 of the imagenet-camera.cpp application, DEFAULT_CAMERA is set to -1, as shown below:

```cpp
#define DEFAULT_CAMERA -1  // -1 for onboard camera, or change to index of /dev/video V4L2 camera (>=0)

bool signal_recieved = false;
```

To work with the e-con camera, change the DEFAULT_CAMERA value to 0, the video node index under which the e-CAM30_CUNANO camera was enumerated (/dev/video0), as shown below:

```cpp
#define DEFAULT_CAMERA 0  // -1 for onboard camera, or change to index of /dev/video V4L2 camera (>=0)

bool signal_recieved = false;
```
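DEFAULT_CAMERA has to match the N in /dev/videoN, and that number can change when other V4L2 devices (for example, a USB webcam) are connected. A hedged sketch of how the index could be looked up: the helper below scans /dev/video0 onward, queries each node with VIDIOC_QUERYCAP, and returns the first index whose card name contains a given string. The function name `find_video_index()`, the scan limit of 64 nodes, and the search string are assumptions; check the card name the e-CAM30_CUNANO driver actually reports before relying on it.

```cpp
#include <fcntl.h>            // open, O_RDWR
#include <sys/ioctl.h>        // ioctl
#include <unistd.h>           // close
#include <linux/videodev2.h>  // VIDIOC_QUERYCAP, v4l2_capability
#include <cstring>            // std::memset
#include <string>

// Hypothetical helper: returns N for the first /dev/videoN whose V4L2 card
// name contains 'card_substring', or -1 if no node matches.
int find_video_index(const std::string& card_substring, int max_index = 64)
{
    for (int i = 0; i < max_index; ++i) {
        const std::string path = "/dev/video" + std::to_string(i);
        int fd = open(path.c_str(), O_RDWR);
        if (fd < 0)
            continue;  // node missing or not accessible

        v4l2_capability cap;
        std::memset(&cap, 0, sizeof(cap));
        const bool ok = ioctl(fd, VIDIOC_QUERYCAP, &cap) == 0;
        close(fd);

        if (!ok)
            continue;
        const std::string card = reinterpret_cast<const char*>(cap.card);
        if (card.find(card_substring) != std::string::npos)
            return i;  // this index is the candidate DEFAULT_CAMERA value
    }
    return -1;
}
```

A return value of -1 here only means that no node matched; it is unrelated to the -1 (onboard camera) value of DEFAULT_CAMERA.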

By default, the camera streaming format is set to YUY2 in the utils/camera/gstCamera.cpp source, as shown below:

```cpp
#if NV_TENSORRT_MAJOR >= 5
    ss << "format=YUY2 ! videoconvert ! video/x-raw, format=RGB ! videoconvert !";
#else
    ss << "format=RGB ! videoconvert ! video/x-raw, format=RGB ! videoconvert !";
#endif
```

However, the e-con e-CAM30_CUNANO camera can stream only in UYVY format.
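One way to confirm which formats a node offers is to enumerate them with VIDIOC_ENUM_FMT. The sketch below assumes the driver exposes a single-planar capture queue (V4L2_BUF_TYPE_VIDEO_CAPTURE); `fourcc_to_string()` and `list_capture_formats()` are hypothetical helpers. Note that GStreamer's YUY2 corresponds to V4L2's YUYV, while UYVY carries the same name in both, so the UYVY caps string in the pipeline matches the V4L2_PIX_FMT_UYVY format the driver reports.

```cpp
#include <fcntl.h>            // open, O_RDWR
#include <sys/ioctl.h>        // ioctl
#include <unistd.h>           // close
#include <linux/videodev2.h>  // VIDIOC_ENUM_FMT, v4l2_fmtdesc, V4L2_PIX_FMT_*
#include <cstdint>
#include <cstring>            // std::memset
#include <string>
#include <vector>

// Converts a V4L2 FourCC code to its 4-character name (low byte first,
// matching the v4l2_fourcc() packing).
std::string fourcc_to_string(std::uint32_t fourcc)
{
    std::string s;
    for (int shift = 0; shift < 32; shift += 8)
        s += static_cast<char>((fourcc >> shift) & 0xFF);
    return s;
}

// Lists the capture pixel formats a V4L2 node reports via VIDIOC_ENUM_FMT.
// Returns an empty vector if the node cannot be opened.
std::vector<std::uint32_t> list_capture_formats(const std::string& device)
{
    std::vector<std::uint32_t> formats;
    int fd = open(device.c_str(), O_RDWR);
    if (fd < 0)
        return formats;

    v4l2_fmtdesc desc;
    std::memset(&desc, 0, sizeof(desc));
    desc.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;
    // Increase the index until the driver returns an error (end of the list).
    for (desc.index = 0; ioctl(fd, VIDIOC_ENUM_FMT, &desc) == 0; ++desc.index)
        formats.push_back(desc.pixelformat);

    close(fd);
    return formats;
}
```

Printing `fourcc_to_string()` for each entry of `list_capture_formats("/dev/video0")` should show UYVY if the camera behaves as described above.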

2. Change the camera streaming format to UYVY in the GStreamer pipeline of the Jetson-inference engine, as shown below:

```cpp
#if NV_TENSORRT_MAJOR >= 5
    ss << "format=UYVY ! videoconvert ! video/x-raw, format=RGB ! videoconvert !";
#else
    ss << "format=RGB ! videoconvert ! video/x-raw, format=RGB ! videoconvert !";
#endif
```
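To see where the format string ends up, the sketch below assembles a complete V4L2 capture pipeline description with the format as a parameter. It is modelled on the fragments above but is not the upstream gstCamera code: the `v4l2src` source element, the width/height caps, and the `appsink name=mysink` sink are assumptions about the surrounding pipeline, and `build_v4l2_pipeline()` is a hypothetical helper.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper: builds a GStreamer pipeline description that captures
// from a V4L2 node in the camera's native format and converts it to RGB.
std::string build_v4l2_pipeline(const std::string& device, int width, int height,
                                const std::string& format = "UYVY")
{
    std::ostringstream ss;
    ss << "v4l2src device=" << device << " ! ";
    ss << "video/x-raw, width=(int)" << width << ", height=(int)" << height << ", ";
    // Negotiate the camera's native format first, then let videoconvert
    // produce the RGB frames the inference code consumes.
    ss << "format=" << format << " ! videoconvert ! video/x-raw, format=RGB ! videoconvert ! ";
    ss << "appsink name=mysink";
    return ss.str();
}
```

A string like this can be handed to GStreamer's `gst_parse_launch()`. Making the format a parameter rather than a hard-coded literal is one way to support cameras with different native formats without editing the pipeline string each time.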

The e-CAM30_CUNANO camera can be used in applications such as those shown below.

Figure 3: User Applications

Below is a demo video of the Jetson-inference engine running on Jetson Nano™ with the e-CAM30_CUNANO camera.

Comments

I have just received my Cam30 camera. I want to use it with my Jetson Nano to try image recognition. However, I do not want to lose what I have been running on the microSD card for the past 6 months. Can I still operate the Cam30 camera? Do I have to modify the kernel that I have been using and modifying for other testing purposes?

You can refer to the upgrade procedure section in the developer guide. To make the camera work, you need to modify the kernel, device tree, and modules. We provide kernel, device tree, and module patches, which you can integrate into your current build to start evaluating the camera.

May I ask what you have modified in the existing kernel?

Also, if you have further queries, feel free to email techsupport@e-consystems.com. The team will assist you.

I just got my e-CAM30 working with the viewer program, but when I try to run the Python version of the jetson-inference examples, it doesn’t work. I’ve made the suggested change to gstCamera.cpp and tried running with either --camera=0 or --camera=/dev/video0, but the former fails with a GStreamer error and the latter freezes the Nano. Any suggestions for how to run the Python examples with your camera?

Hi Philip Worthington,

As mentioned in the blog, the following changes have to be made in the jetson-inference source code to run and test the imagenet-camera sample with the e-CAM30_CUNANO camera.

1. Set the DEFAULT_CAMERA macro in imagenet-camera/imagenet-camera.cpp to the index of the e-CAM30_CUNANO /dev/video V4L2 camera.

2. Update the camera streaming format in the utils/camera/gstCamera.cpp source.

From your comment, we understand that you have successfully completed the second step.

However, instead of specifying the device node with the --camera=0 or --camera=/dev/video0 options, kindly follow the first step: set the e-CAM30_CUNANO camera device node in the source code itself, then rebuild and test the jetson-inference example.