e-con Systems has been working on 3D camera technologies for more than a decade, starting with our first passive stereo camera. Since then, we have been exploring different ways to improve the generation of 3D data. More recently, we have been rigorously exploring Time-of-Flight (ToF) technology, a newer approach to 3D depth mapping.

Surprisingly, the technology has been around since the introduction of the lock-in CCD technique in the 1990s. However, it has only recently matured enough to meet the demands of non-mobile markets such as industrial and retail.

In this blog, we’ll explore the world of 3D depth mapping, its different types, how they work, and why ToF-based cameras are preferred over the rest.

What is 3D Depth Mapping?

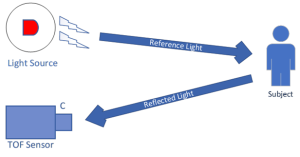

3D depth mapping, also known as depth sensing or 3D mapping, creates a three-dimensional view of a given space or object by measuring the distance between a sensor and various points in the environment. Many approaches involve projecting light onto the subject and capturing the reflected light with a camera or sensor.

The time or pattern of light reflection is then analyzed to calculate the distances to different parts of the scene, creating a depth map. Basically, the map is a digital representation that indicates how far away each part of the scene is from the point of view of the sensor.
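To make this concrete, the sketch below treats a depth map as a small grid of per-pixel distances in metres and converts each valid pixel into a 3D point using the standard pinhole camera model. The intrinsic parameters (`fx`, `fy`, `cx`, `cy`) and the 2×2 depth values are illustrative assumptions, not data from a real camera, and the depth values are assumed to be measured along the optical axis rather than as radial distance.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Hypothetical pinhole-camera intrinsics, all in pixels."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x
    cy: float  # principal point y


def depth_map_to_points(depth, k):
    """Back-project a depth map into a list of (X, Y, Z) points in metres.

    `depth` is a list of rows; each value is assumed to be depth along the
    optical axis (Z), not radial distance. Values <= 0 mark invalid pixels.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid measurement at this pixel
            x = (u - k.cx) * z / k.fx
            y = (v - k.cy) * z / k.fy
            points.append((x, y, z))
    return points


k = Intrinsics(fx=500.0, fy=500.0, cx=0.5, cy=0.5)
depth = [
    [1.0, 1.0],
    [2.0, 0.0],  # 0.0 marks a pixel with no return
]
for point in depth_map_to_points(depth, k):
    print(point)
```

Each valid pixel becomes one point, so a full-resolution depth map is effectively a point cloud of the scene as seen from the sensor.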

3D depth mapping has been invaluable in helping several industries break new ground in innovation. After all, relying on 2D camera data alone can be restrictive for applications that need reliable data to make real-time decisions.

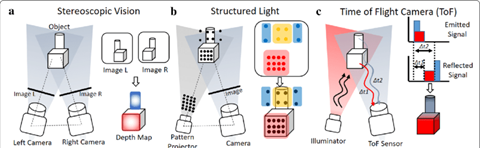

Before we compare Time-of-Flight with other 3D mapping technologies, let’s take a deeper look at its two main challengers: stereo vision imaging and structured light imaging.

Read: Everything you need to know about 3D mapping cameras

What is Stereo Vision Technology?

Human binocular vision perceives depth through stereo disparity, the difference in an object’s image location as seen by the left and right eyes. The brain uses this binocular disparity to extract depth information from the two 2D retinal images, a process known as stereopsis.

Stereo vision technology mimics the human binocular vision system using two cameras placed a fixed distance apart. Each camera records its own view, and the differences between these images are used to compute distances to objects within the scene.

The depth map is constructed by aligning two images to find matching features and measuring the difference in horizontal displacement (disparity) for these matches. The greater the disparity, the closer the object is to the viewer. It allows vision systems to interact with their surroundings more responsively, simulating human-like perception capabilities.
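For a calibrated, rectified stereo pair, this inverse relationship has a simple closed form: depth Z = f·B/d, where f is the focal length in pixels, B is the baseline (distance between the cameras) and d is the disparity in pixels. The minimal sketch below assumes a hypothetical rig with a 700 px focal length and a 60 mm baseline; it is not the calibration of any specific camera.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth Z = f * B / d for a rectified stereo pair.

    focal_px: focal length expressed in pixels
    baseline_m: distance between the two camera centres, in metres
    disparity_px: horizontal shift of a matched feature, in pixels
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means a point at infinity)")
    return focal_px * baseline_m / disparity_px


# Assumed example rig: 700 px focal length, 60 mm baseline.
for d in (84, 42, 21):
    print(f"disparity {d:>2} px -> depth {disparity_to_depth(d, 700, 0.06):.2f} m")
```

Halving the disparity doubles the estimated depth, which is why distant objects (small disparities) are measured less precisely than near ones.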

How do stereo vision cameras work?

Stereo vision cameras like e-con Systems’ Tara and TaraXL mimic this aspect of human vision, perceiving depth through a geometric approach called triangulation. Key properties that affect depth performance include:

- Baseline: The distance between the two cameras (about 50–75 mm, comparable to the human interpupillary distance).

- Resolution: Higher resolution improves depth resolution. More pixels mean more disparity levels to search, giving finer depth steps, but at the cost of a higher computational load.

- Focal length: A longer focal length increases the disparity for a given distance, so depth can be resolved farther away, but it narrows the Field of View. A shorter focal length gives a wider Field of View and is better suited to near-range depth.
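The effect of baseline and focal length can be quantified by differentiating Z = f·B/d: one disparity step Δd corresponds to a depth step of roughly ΔZ ≈ Z²·Δd/(f·B), while the horizontal field of view of a pinhole camera is 2·atan(W/(2f)) for an image W pixels wide. The sketch below compares a few assumed configurations at 3 m; the 1280 px width and the rig values are illustrative only, and real matchers often use sub-pixel interpolation to make Δd smaller than one pixel.

```python
import math


def depth_resolution_m(depth_m, focal_px, baseline_m, disparity_step_px=1.0):
    """Approximate depth step for one disparity step: dZ ~= Z**2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_step_px / (focal_px * baseline_m)


def horizontal_fov_deg(image_width_px, focal_px):
    """Horizontal field of view of a pinhole camera, in degrees."""
    return math.degrees(2 * math.atan(image_width_px / (2 * focal_px)))


WIDTH_PX = 1280  # assumed sensor width in pixels
for focal_px, baseline_m in [(700, 0.06), (1400, 0.06), (700, 0.12)]:
    dz = depth_resolution_m(3.0, focal_px, baseline_m)
    fov = horizontal_fov_deg(WIDTH_PX, focal_px)
    print(f"f={focal_px} px, B={baseline_m * 1000:.0f} mm: "
          f"dZ at 3 m ~ {dz * 100:.1f} cm, HFOV ~ {fov:.0f} deg")
```

Doubling either the focal length or the baseline halves the depth step at a given distance, but the longer focal length also narrows the field of view.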

After capturing two 2D images from different positions, stereo vision cameras correlate them to create a depth image. Because they are passive and rely on ambient light rather than their own illumination, stereo vision cameras are well suited to outdoor applications with a large field of view. However, both images must contain sufficient detail and texture (non-uniformity) for matching to work. Where surfaces lack texture, the scene can be illuminated with structured lighting to add detail and improve depth quality.
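The need for texture follows from how correspondences are typically found. The sketch below uses sum-of-absolute-differences (SAD) block matching along one rectified image row, a common textbook technique and not necessarily what any particular stereo camera implements; the pixel values are made up.

```python
def match_disparity(left_row, right_row, x, half_window=2, max_disparity=8):
    """Find the disparity of pixel x by sum-of-absolute-differences (SAD) block matching.

    Assumes a rectified pair: a point at column x in the left row appears at
    column x - d in the right row. Returns (best_disparity, costs_by_disparity).
    """
    if x - half_window < 0 or x + half_window >= len(left_row):
        raise ValueError("matching window does not fit inside the row")
    costs = {}
    for d in range(max_disparity + 1):
        if x - d - half_window < 0:
            break  # candidate window would fall off the left edge of the right row
        costs[d] = sum(
            abs(left_row[x + i] - right_row[x - d + i])
            for i in range(-half_window, half_window + 1)
        )
    best = min(costs, key=costs.get)
    return best, costs


def is_ambiguous(costs):
    """True when more than one disparity shares the lowest matching cost."""
    lowest = min(costs.values())
    return sum(1 for c in costs.values() if c == lowest) > 1


# Made-up 8-bit intensities along one image row.
textured_right = [10, 50, 20, 80, 30, 90, 15, 60, 40, 70, 25, 85, 5, 55, 35, 75]
textured_left = [0, 0, 0] + textured_right[:-3]  # same scene, shifted 3 px
flat_right = [128] * 16  # e.g. a blank, evenly lit wall
flat_left = [128] * 16

for name, left, right in [("textured", textured_left, textured_right),
                          ("flat", flat_left, flat_right)]:
    d, costs = match_disparity(left, right, x=10)
    print(f"{name}: disparity {d}, ambiguous: {is_ambiguous(costs)}")
```

On the textured row the lowest cost is unique at the true 3 px shift; on the flat row every candidate costs the same, so the chosen disparity is arbitrary. Adding projected texture to such surfaces gives the matcher something to lock onto.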

Stereo vision cameras are deployed in use cases such as autonomous navigation systems, robotics, 3D reconstruction, and 3D video recording.

What is Structured Light Imaging?

Structured light uses a light source (laser or LED) to project a known light pattern, such as narrow stripes, onto a surface. The camera detects how the illuminated pattern is distorted by the surface, and the 3D shape is reconstructed geometrically. Using triangulation across one or more captured images, the system can measure an object’s dimensions, even when its shape is complex, and quickly build a 3D reconstruction.
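One common way to use several images is temporal binary coding: the projector shows a sequence of stripe patterns, and each camera pixel records whether it was lit in each one. With Gray-coded stripes (an assumed, widely used scheme, not necessarily the one any specific product uses), the on/off sequence at a pixel decodes to the projector column that illuminated it, which is the correspondence triangulation needs. The intensity values below are simulated.

```python
def gray_encode(n):
    """Binary-reflected Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def gray_decode(g):
    """Invert gray_encode by XOR-ing together all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def pattern_bits_for_column(column, n_bits):
    """On/off state of projector column `column` in each of n_bits patterns (MSB first)."""
    code = gray_encode(column)
    return [(code >> (n_bits - 1 - i)) & 1 for i in range(n_bits)]


def binarize(intensities, white, black):
    """Threshold one pixel's captures halfway between its all-white and all-black shots."""
    mid = (white + black) / 2
    return [1 if value > mid else 0 for value in intensities]


def decode_pixel(bits):
    """Recover the projector column that lit a camera pixel from its bit sequence."""
    code = 0
    for bit in bits:
        code = (code << 1) | bit
    return gray_decode(code)


N_BITS = 4  # 4 patterns -> 16 distinguishable projector columns
# Simulated captures for a pixel lit by projector column 11 (lit ~200, unlit ~30).
captures = [200 if b else 30 for b in pattern_bits_for_column(11, N_BITS)]
bits = binarize(captures, white=210, black=20)
print(bits, "->", decode_pixel(bits))
```

Gray code is chosen here because adjacent columns differ in exactly one pattern, so a misread bit at a stripe boundary shifts the decoded column by at most one.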

How do structured light cameras work?

Structured light cameras approach 3D mapping by projecting light patterns onto objects and observing the distortion. This projected pattern is specifically designed to enhance the camera’s ability to discern and measure variations in the surface it illuminates.

When these patterns hit the surface of an object, they deform according to the object’s shape and spatial properties. Structured light cameras capture these patterns through their imaging sensors, which are positioned at a different angle from the light source. They can deduce the depth of the object with high accuracy by comparing the known pattern of the light projected and the pattern captured after it interacts with the 3D surfaces of the object.

The variations in pattern distortion are processed to calculate the distance from the camera to various points on the object’s surface, creating a 3D map of the object.
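In many implementations this comparison reduces to triangulation, with the projector acting as a second "camera". One widely published formulation (used, for example, in analyses of the first-generation Kinect sensor) records the pattern on a flat reference plane at a known distance Z0 and converts each feature’s pixel shift d into depth via 1/Z = 1/Z0 + d/(f·b), where f is the camera focal length in pixels and b is the projector-camera baseline. The sketch below implements only that formula with assumed geometry; it is not the processing pipeline of any specific structured light camera.

```python
def depth_from_pattern_shift(shift_px, ref_depth_m, focal_px, baseline_m):
    """Depth of a surface point from the shift of a projected pattern feature.

    shift_px is measured against the same feature imaged on a flat reference
    plane at ref_depth_m; positive shifts mean the surface is closer than the
    plane. Implements 1/Z = 1/Z0 + d / (f * b).
    """
    inv_depth = 1.0 / ref_depth_m + shift_px / (focal_px * baseline_m)
    if inv_depth <= 0:
        raise ValueError("shift corresponds to a point at or beyond infinity")
    return 1.0 / inv_depth


# Assumed geometry: 580 px focal length, 75 mm projector-camera baseline,
# reference plane recorded at 1.0 m.
F_PX, BASELINE_M, REF_M = 580.0, 0.075, 1.0
for shift in (0.0, 21.75, -14.5):
    z = depth_from_pattern_shift(shift, REF_M, F_PX, BASELINE_M)
    print(f"shift {shift:+6.2f} px -> depth {z:.3f} m")
```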

Some of the use cases of structured light cameras are industrial quality inspection, 3D scanning, gesture recognition, biometric security systems, and medical imaging.

What is Time-of-Flight Imaging?

Time-of-Flight (ToF) technology calculates the time it takes for light to travel to an object, reflect off of it, and return to the sensor. The measurement lets ToF cameras accurately determine the distance to various objects in a scene. The main components of a ToF camera include a ToF sensor and a sensor module, which capture and convert the reflected light into data that the camera can process.

ToF cameras typically use a light source such as a VCSEL (vertical-cavity surface-emitting laser) or an LED that emits light in the near-infrared (NIR) spectrum. Alongside it, a depth sensor processes the raw data from the ToF sensor, filtering out noise and other inaccuracies to deliver clean depth information.
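The core arithmetic of time of flight is short: the light covers the sensor-to-object distance twice, so the distance is d = c·t/2 for a round-trip time t. The plain-Python sketch below (no camera API assumed) also shows why the timing electronics are demanding: millimetre-scale depth changes correspond to picosecond-scale changes in the round trip.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_s):
    """Target distance: the light travels there and back, so d = c * t / 2."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_from_distance(distance_m):
    """Round-trip time of flight for a target at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


for d in (0.5, 1.0, 5.0):
    print(f"{d:.1f} m -> round trip {round_trip_from_distance(d) * 1e9:.2f} ns")

# A 1 mm change in distance shifts the round trip by only about 6.7 ps.
print(f"1 mm -> {round_trip_from_distance(0.001) * 1e12:.2f} ps")
```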

Read: What is a ToF sensor? What are the key components of a ToF camera?

How do ToF cameras work?

Time-of-Flight cameras measure depth using active light detection. In general, “time of flight” refers to the time it takes for a wave, particle, or object to travel a distance through a medium. ToF cameras project laser light onto the scene and use a sensor that is sensitive to that light’s wavelength to detect the light that bounces back.

Then, the sensor gauges the distance to the object based on the time difference between when the light is emitted and when the reflected light is received.
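In many ToF cameras, including designs descended from the lock-in technique mentioned earlier, this time difference is not timed directly. Instead, the light is amplitude-modulated and the sensor samples the returning signal at four phase offsets (0°, 90°, 180°, 270°); the phase shift φ of the return gives distance d = c·φ/(4π·f_mod), which repeats every c/(2·f_mod). The sketch below assumes an ideal sinusoidal correlation model and a 20 MHz modulation frequency chosen for illustration; real sensors add calibration and noise filtering on top of this.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def simulate_samples(distance_m, f_mod_hz, amplitude=100.0, offset=500.0):
    """Ideal correlation samples at 0, 90, 180 and 270 degrees for a target at distance_m.

    Model: A_k = offset + amplitude * cos(phi + k * pi / 2), with phi = 4*pi*f*d/c.
    """
    phi = 4 * math.pi * f_mod_hz * distance_m / C
    return [offset + amplitude * math.cos(phi + k * math.pi / 2) for k in range(4)]


def depth_from_samples(samples, f_mod_hz):
    """Recover (distance_m, amplitude) from the four phase samples."""
    a0, a1, a2, a3 = samples
    # Under the model above, a3 - a1 = 2A*sin(phi) and a0 - a2 = 2A*cos(phi);
    # the differences also cancel the constant offset (e.g. ambient light).
    phi = math.atan2(a3 - a1, a0 - a2) % (2 * math.pi)  # wrap into [0, 2*pi)
    amplitude = math.hypot(a3 - a1, a0 - a2) / 2
    return C * phi / (4 * math.pi * f_mod_hz), amplitude


def unambiguous_range(f_mod_hz):
    """Distance at which the measured phase wraps back to zero: c / (2 * f_mod)."""
    return C / (2 * f_mod_hz)


F_MOD = 20e6  # assumed 20 MHz modulation frequency
print(f"unambiguous range: {unambiguous_range(F_MOD):.3f} m")
for true_d in (2.5, 9.0):
    measured, amp = depth_from_samples(simulate_samples(true_d, F_MOD), F_MOD)
    print(f"true {true_d} m -> measured {measured:.3f} m (amplitude {amp:.1f})")
```

The 9 m target comes back at about 1.5 m because its phase has wrapped past one full cycle; lower or multiple modulation frequencies are a common way to extend the unambiguous range, at some cost in precision.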

The image below shows how ToF cameras measure the distance to a target object:

Popular use cases of Time-of-Flight cameras include AGVs, AMRs, pick and place robots, patient monitoring, and more.

Watch: Webinar on ToF cameras by e-con Systems

Watch: e-con Systems’ Time-of-Flight (ToF) camera live demo at Embedded Vision’22

Stereo Vision vs. Structured Light vs. Time-of-Flight (ToF)

Every embedded vision technology available for 3D image mapping has its own pros and cons. Let’s see how Time-of-Flight (ToF) cameras fare in comparison to the other two 3D technologies: stereo vision and structured light.

The following is a pictorial representation of how the three 3D imaging technologies work:

Now, let’s go through a detailed comparison by parameters such as cost, accuracy, depth range, low light performance, etc.

| Parameter | Stereo Vision | Structured Light | Time-of-Flight |
| --- | --- | --- | --- |
| Principle | Compares disparities of stereo images from two 2D sensors | Detects distortions of illuminated patterns by 3D surface | Measures the transit time of reflected light from the target object |
| Software Complexity | High | Medium | Low |
| Material Cost | Low | High | Medium |
| Depth (“z”) Accuracy | cm | µm–cm | mm–cm |
| Depth Range | Limited | Scalable | Scalable |
| Low Light | Weak | Good | Good |
| Outdoor | Good | Weak | Fair |
| Response Time | Medium | Slow | Fast |
| Compactness | Low | High | Low |
| Power Consumption | Low | Medium | Scalable |

Why Time-of-Flight (ToF) cameras are a better choice for 3D mapping

Reduced software complexity: ToF cameras provide depth data directly from the module, avoiding the need to run depth-matching algorithms on the host platform.

Higher depth accuracy than stereo vision: ToF cameras deliver mm-to-cm depth accuracy because they rely on their own precisely controlled laser illumination rather than on scene texture.

More depth scalability: ToF cameras have a scalable depth range based on the number of VCSELs used for illumination.

Better low light performance: ToF cameras perform better in low-light conditions due to their active and reliable light source.

Compact size: ToF cameras can have a very compact form factor because the sensor and illumination can be placed together, with no baseline needed between them.
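A rough way to see why depth range scales with the number of VCSELs: for a diffuse target, the signal returned to each pixel falls off roughly with the square of distance, so keeping the same per-pixel signal at a longer range needs proportionally more optical power. The back-of-the-envelope sketch below assumes identical, equally driven VCSELs, an unchanged field of view and exposure, and a target of the same reflectivity; the reference figures are hypothetical, not specifications of any DepthVista camera.

```python
import math


def vcsels_needed(target_range_m, ref_range_m, ref_vcsel_count):
    """Emitters needed to keep per-pixel return signal constant at a new range.

    Assumes return signal per pixel scales as (emitter count) / range**2, with
    identical emitters, unchanged field of view and exposure, and a diffuse
    target of the same reflectivity.
    """
    scale = (target_range_m / ref_range_m) ** 2
    # Small tolerance so floating-point noise on an exact ratio does not round up.
    return math.ceil(ref_vcsel_count * scale - 1e-9)


def range_for_vcsels(vcsel_count, ref_range_m, ref_vcsel_count):
    """Inverse of vcsels_needed under the same 1/range**2 model."""
    return ref_range_m * math.sqrt(vcsel_count / ref_vcsel_count)


# Hypothetical reference point: one VCSEL gives usable signal out to 1.2 m.
for target in (1.2, 2.4, 6.0):
    print(f"{target:.1f} m -> about {vcsels_needed(target, 1.2, 1)} VCSEL(s)")
print(f"4 VCSELs -> about {range_for_vcsels(4, 1.2, 1):.1f} m")
```

Because of the square law, doubling the range needs roughly four times the emitted power.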

Applications Powered By Time-of-Flight Cameras

Autonomous Mobile Robots (Indoor/Outdoor)

Time-of-Flight cameras are highly favored in indoor and outdoor AMRs thanks to their ability to quickly measure distances and detect obstacles. This capability enhances the robot’s navigation and safety, allowing for smoother movement in complex environments.
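As a minimal illustration of detecting obstacles from depth data, the sketch below checks a region of interest in front of the robot for the nearest valid reading and flags it if it falls inside a stopping distance. The frame layout (rows of metres, 0.0 for no return), the region and the 0.5 m threshold are all hypothetical; a production AMR stack would typically filter noise and fuse this with other sensors.

```python
def nearest_obstacle(depth, rows, cols, min_valid_m=0.05):
    """Smallest valid depth (m) inside the region of interest, or None if none is valid.

    Readings below min_valid_m (including 0.0 'no return' pixels) are ignored.
    """
    readings = [depth[r][c] for r in rows for c in cols if depth[r][c] >= min_valid_m]
    return min(readings) if readings else None


def should_stop(depth, rows, cols, stop_distance_m=0.5):
    """True if anything in the region is closer than the stopping distance."""
    nearest = nearest_obstacle(depth, rows, cols)
    return nearest is not None and nearest < stop_distance_m


# Tiny hypothetical depth frame in metres; 0.0 marks a pixel with no return.
frame = [
    [3.0, 3.1, 3.2, 3.0],
    [2.9, 0.4, 0.0, 3.1],
    [2.8, 2.9, 3.0, 2.9],
]
print(should_stop(frame, rows=range(3), cols=range(1, 3)))  # region containing the 0.4 m reading
print(should_stop(frame, rows=range(3), cols=range(3, 4)))  # clear region on the right
```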

Automated Guided Vehicles (AGVs)

Time-of-Flight cameras contribute to more reliable guidance systems. These cameras provide real-time data critical for the path-finding algorithms that AGVs use to navigate through warehouses and manufacturing facilities without human intervention.

Pick and place robots

Pick & place robots benefit from Time-of-Flight cameras as they require accurate and rapid depth information to manipulate objects. Whether the task involves assembling components or packaging goods, ToF cameras help these robots adjust their actions based on the accurate location and orientation of items.

Remote patient monitoring systems

Time-of-Flight cameras support remote patient monitoring systems by providing continuous, non-contact measurement of patient movements and vital signs. This enables effective monitoring without the need for physical interaction, which can be especially beneficial in telehealth applications.

Read: How does a Time-of-Flight camera make remote patient monitoring more secure?

Face recognition-based anti-spoofing devices

Time-of-Flight cameras are important for improving the security features of face recognition systems, especially in anti-spoofing devices. These cameras analyze depth data to help distinguish between genuine human faces and replicas, thus preventing unauthorized access through facial recognition spoofing.
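The idea behind depth-based anti-spoofing can be illustrated with a deliberately simple heuristic: a printed photo or screen is nearly flat, even when tilted, while a real face has centimetres of relief. The sketch below fits a plane to face-region depth samples by least squares and measures how far the samples deviate from it. The 5 mm threshold, the 11×11 grid and the paraboloid "face" are assumptions for illustration only; real anti-spoofing systems combine many more cues.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares plane z = a*u + b*v + c through (u, v, z) samples (Cramer's rule)."""
    rows = [(u, v, 1.0) for u, v, _ in points]
    # Normal equations: (A^T A) [a, b, c] = A^T z
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atz = [sum(r[i] * p[2] for r, p in zip(rows, points)) for i in range(3)]
    d = det3(ata)
    if abs(d) < 1e-12:
        raise ValueError("face region is degenerate; cannot fit a plane")
    coeffs = []
    for col in range(3):
        m = [row[:] for row in ata]
        for i in range(3):
            m[i][col] = atz[i]  # replace one column with the right-hand side
        coeffs.append(det3(m) / d)
    return coeffs  # [a, b, c]


def depth_relief_m(points):
    """RMS deviation (m) of face-region depths from their best-fit plane."""
    a, b, c = fit_plane(points)
    residuals = [z - (a * u + b * v + c) for u, v, z in points]
    return math.sqrt(sum(e * e for e in residuals) / len(residuals))


def looks_like_flat_spoof(points, min_relief_m=0.005):
    """Flag a face region whose depth barely departs from a plane (photo or screen)."""
    return depth_relief_m(points) < min_relief_m


# Synthetic 11 x 11 face region in pixel coordinates (u, v), depths in metres.
grid = [(u, v) for u in range(-5, 6) for v in range(-5, 6)]
tilted_photo = [(u, v, 0.6 + 0.01 * u + 0.005 * v) for u, v in grid]
curved_face = [(u, v, 0.6 + 0.001 * (u * u + v * v)) for u, v in grid]
print("photo flagged:", looks_like_flat_spoof(tilted_photo))
print("face flagged:", looks_like_flat_spoof(curved_face))
```

Fitting a plane, rather than just taking the depth range, keeps a tilted photo from looking "deep" simply because one edge is farther from the camera than the other.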

e-con Systems & ToF cameras: What’s happening now?

e-con Systems, with more than 20 years of experience in delivering off-the-shelf and custom OEM cameras, has equipped several clients with cutting-edge Time-of-Flight imaging solutions. Our ToF cameras operate in the NIR spectrum (940 nm/850 nm), providing dependable 3D imaging in indoor or outdoor settings.

These cameras have built-in depth analysis, delivering real-time 2D and 3D data. Our camera range also comes with three interface options: USB, MIPI, and GMSL2. Additionally, we offer driver and SDK compatibility for NVIDIA Jetson AGX Orin/AGX Xavier and x86-based systems.

Our ToF cameras include:

- DepthVista_MIPI_IRD – 3D ToF Camera for NVIDIA® Jetson AGX Orin™ / AGX Xavier™

- DepthVista_USB_RGBIRD – 3D ToF USB Camera

- DepthVista_USB_IRD – 3D ToF USB Camera

We also have over a decade of experience in working with stereo vision-based 3D camera technologies.

Our stereo vision cameras include:

Explore our Camera Selector Page to see e-con Systems’ full portfolio

If you need help integrating ToF or any other 3D mapping cameras into your embedded vision applications, please write to us at camerasolutions@e-consystems.com.

Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, with more than 15 years of experience in the embedded vision space. He has deep knowledge of USB cameras, embedded vision cameras, vision algorithms, and FPGAs. He has built 50+ camera solutions spanning domains such as medical, industrial, agriculture, retail, biometrics, and more. He also has expertise in device driver and BSP development. Currently, Prabu’s focus is to build smart camera solutions that power new-age AI-based applications.