The Qualcomm® QCS610 is a high-performance IoT System-on-Chip (SoC) designed for developing advanced smart camera and IoT use cases that encompass machine learning, edge computing, sensor processing, voice UI enablement, and integrated wireless connectivity. It is engineered to deliver powerful on-device camera processing and machine learning, with exceptional power and thermal efficiency, across a wide range of IoT applications. It integrates a powerful Image Signal Processor (ISP) and the Qualcomm® Artificial Intelligence (AI) Engine, along with a heterogeneous compute architecture that includes a highly optimized custom CPU, GPU, and DSP for accelerated AI performance.

These features have encouraged many product developers to build embedded vision devices for object detection on the QCS610. In this article, we will learn in detail how to run an object detection AI algorithm on the Qualcomm QCS610 by setting up the Qualcomm Neural Processing SDK for AI and running a tflite model.

Object detection using QCS610

To run an object detection application on the Qualcomm QCS610 SoC, you need a camera integrated with it. e-con Systems’ qSmartAI80_CUQ610 is a QCS610-based smart AI camera that can be used for this out of the box. It is a complete AI vision kit that comes integrated with the Sony STARVIS IMX415 sensor.

This vision kit uses a GStreamer pipeline to capture images for object detection. It also uses TensorFlow Lite (tflite), a set of tools that enables on-device machine learning by letting developers run their trained deep learning models on mobile, embedded, and IoT devices as well as computers. TensorFlow Lite supports platforms such as embedded Linux, Android, iOS, and microcontrollers (MCUs).

Configuring the qSmartAI80_CUQ610 vision kit for object detection involves two steps:

- Installing and setting up the Qualcomm neural processing SDK for AI

- Running the tflite model on QCS610

Let us now look at each of these steps in detail.

1. How to install the Qualcomm Neural Processing SDK

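Qualcomm provides its own Neural Processing SDK for artificial intelligence (AI). It allows developers to convert neural network models trained in Caffe/Caffe2, ONNX, or TensorFlow into the SDK’s Deep Learning Container (DLC) format and run them on the CPU, GPU, or DSP of Qualcomm SoCs.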

To install the SDK, your host machine must meet the following requirements:

- Ubuntu 18.04

- Android SDK and Android NDK (android-ndk-r17c-linux-x86), installed either through the Android Studio SDK Manager or as a stand-alone package

- TensorFlow and TensorFlow Lite installed
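Before installing anything, you can check the requirements above with a short shell script. This is a minimal sketch and is not part of the SDK. The function names are hypothetical, and the script makes three assumptions: Ubuntu's standard /etc/os-release file exists, the NDK path is in ANDROID_NDK_ROOT (which is exported later during SDK initialization), and python3 should be able to import TensorFlow.

```shell
#!/usr/bin/env bash
# Hypothetical host pre-flight check (not part of the Qualcomm SDK).
# Assumptions: Ubuntu-style /etc/os-release, NDK path in ANDROID_NDK_ROOT,
# and TensorFlow installed for python3.

# Succeeds if the given os-release file describes Ubuntu 18.04.
is_ubuntu_1804() {
    local os_release="${1:-/etc/os-release}"
    [ -r "$os_release" ] || return 1
    # Read the file in a subshell so ID/VERSION_ID do not leak into the caller.
    ( . "$os_release" && [ "$ID" = "ubuntu" ] && [ "$VERSION_ID" = "18.04" ] )
}

# Succeeds if the directory (default: $ANDROID_NDK_ROOT) contains ndk-build.
has_android_ndk() {
    local ndk="${1:-${ANDROID_NDK_ROOT:-}}"
    [ -n "$ndk" ] && [ -x "$ndk/ndk-build" ]
}

# Succeeds if python3 can import the named module (default: tensorflow).
has_python_module() {
    python3 -c 'import importlib, sys; importlib.import_module(sys.argv[1])' \
        "${1:-tensorflow}" >/dev/null 2>&1
}

# Print a warning for each failed check; return non-zero if any failed.
check_host() {
    local status=0
    is_ubuntu_1804 || { echo "WARN: host is not Ubuntu 18.04"; status=1; }
    has_android_ndk || { echo "WARN: ANDROID_NDK_ROOT does not point to an NDK"; status=1; }
    has_python_module tensorflow || { echo "WARN: python3 cannot import tensorflow"; status=1; }
    return "$status"
}

check_host || echo "Resolve the warnings above before installing the SDK."
```

The script only prints warnings and does not change anything on the host. You can therefore rerun it after each prerequisite is installed.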

Setting up the Qualcomm Neural Processing SDK for AI

Follow these steps to install the Qualcomm Neural Processing SDK:

- Download the latest version of the SDK from the Qualcomm Neural Processing SDK website and extract it. The commands below assume it is extracted to ~/snpe-sdk.

- Install the SDK’s dependencies:

sudo apt-get install python3-dev python3-matplotlib python3-numpy python3-protobuf python3-scipy python3-skimage python3-sphinx wget zip

- Verify that all dependencies are installed:

~/snpe-sdk/bin/dependencies.sh

- Verify that the Python dependencies are installed:

source ~/snpe-sdk/bin/check_python_depends.sh

- Initialize the Qualcomm Neural Processing SDK environment:

cd ~/snpe-sdk/

export ANDROID_NDK_ROOT=~/Android/Sdk/ndk-bundle

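- Set up the environment for TensorFlow:

Navigate to $SNPE_ROOT (the SDK root directory, ~/snpe-sdk in this example) and run the following script to set up the SDK’s environment for TensorFlow:

source bin/envsetup.sh -t $TFLITE_DIR

Note: $TFLITE_DIR is the installation path of the TensorFlow (tflite) Python package, for example the tensorflow directory under your Python site-packages.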

- Train a TensorFlow Lite object detection model on images labeled with the required classes.

- Finally, keep the model (detect.tflite), the label map (labelmap.txt), and the configuration file (mle_tflite.config) ready to be pushed to the QCS610.
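You can also collect the environment steps above into one file that you source on the host. This is a sketch, and the helper names in it are hypothetical. It makes three assumptions:

- The default paths are the ones used in this article (~/snpe-sdk and ~/Android/Sdk/ndk-bundle).
- $TFLITE_DIR is the directory of the tensorflow Python package.
- The files to deploy use the names from the push commands in the next section.

The check_deploy_files helper only confirms that those files exist and are non-empty.

```shell
#!/usr/bin/env bash
# Sketch: wrap the SDK environment steps in reusable functions.
# Assumptions: SDK extracted to ~/snpe-sdk, NDK at ~/Android/Sdk/ndk-bundle,
# TensorFlow installed for python3. Source this file so exports persist.

# Print the install directory of a Python package, e.g. "tensorflow".
python_pkg_dir() {
    python3 -c 'import importlib, os, sys; m = importlib.import_module(sys.argv[1]); print(os.path.dirname(m.__file__))' "$1"
}

# Mirror the manual steps: set the NDK path, find TFLITE_DIR, run envsetup.sh.
setup_snpe_env() {
    local sdk_root="${1:-$HOME/snpe-sdk}"
    export ANDROID_NDK_ROOT="${ANDROID_NDK_ROOT:-$HOME/Android/Sdk/ndk-bundle}"
    TFLITE_DIR="$(python_pkg_dir tensorflow)" || {
        echo "python3 cannot import tensorflow" >&2
        return 1
    }
    export TFLITE_DIR
    cd "$sdk_root" || return 1
    # envsetup.sh ships with the SDK; -t takes the TensorFlow directory.
    source bin/envsetup.sh -t "$TFLITE_DIR"
}

# Confirm the files to be pushed to the kit exist and are non-empty.
check_deploy_files() {
    local dir="${1:-.}" missing=0 f
    for f in detect.tflite labelmap.txt mle_tflite.config; do
        if [ ! -s "$dir/$f" ]; then
            echo "missing or empty: $dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, run `. ./snpe_env.sh && setup_snpe_env && check_deploy_files ~/models`, where snpe_env.sh and ~/models are placeholder names. Because envsetup.sh exports variables into the current shell, the file must be sourced rather than executed.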

2. How to run the tflite model on QCS610

Running the tflite model on QCS610 involves the following steps:

- Connect the USB 3.0 Type-C cable to the development kit.

- Power on the development kit and wait a few seconds for it to boot.

- Open a terminal on the Linux host PC.

- Push the tflite model, label map, and configuration file to the development kit with the following commands:

$ adb shell mkdir /data/DLC

$ adb push detect.tflite /data/DLC

$ adb push labelmap.txt /data/DLC

$ adb push mle_tflite.config /data/misc/camera

- Run the following commands to enter the adb shell.

$ adb root

$ adb shell

- Connect an external monitor to the HDMI port.

- Before running GStreamer, run the following command in the development kit’s terminal/console to start the Weston display server:

/ # export XDG_RUNTIME_DIR=/dev/socket/weston; weston --tty=1 --idle-time=0 &

- Run the following gst-launch pipeline to start a live preview on the display:

/ # gst-launch-1.0 qtiqmmfsrc name=camsrc camera=0 ! video/x-raw\(memory:GBM\),format=NV12,width=1280,height=720,framerate=30/1, camera=0 ! qtimletflite config=/data/misc/camera/mle_tflite.config model=/data/DLC/detect.tflite labels=/data/DLC/labelmap.txt postprocessing=detection ! queue ! qtioverlay ! waylandsink fullscreen=true async=true sync=false

- These steps run the tflite model on the Qualcomm QCS610, and qtioverlay draws the detection results on the live preview.
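You can also script the host-side steps above. This sketch makes three assumptions:

- adb is on the PATH.
- A single kit is connected.
- The Weston server from the earlier step is already running on the kit.

The script differs from the manual steps in two ways. It runs adb root before pushing, in case writing to /data needs root, and it uses mkdir -p so that reruns do not fail. Setting ADB=echo prints the commands instead of running them. The pipeline builder only parameterizes the resolution and frame rate of the pipeline shown above. All function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: automate pushing the model files and launching the pipeline.
# Assumptions: adb on PATH, one kit connected, Weston already running.
# Override ADB (e.g. ADB=echo) for a dry run.
ADB="${ADB:-adb}"

# Push the model, label map and config to the paths used in this article.
push_model_files() {
    local src="${1:-.}"
    $ADB root || return 1
    $ADB wait-for-device || return 1
    $ADB shell mkdir -p /data/DLC || return 1
    $ADB push "$src/detect.tflite" /data/DLC || return 1
    $ADB push "$src/labelmap.txt" /data/DLC || return 1
    $ADB push "$src/mle_tflite.config" /data/misc/camera
}

# Build the gst-launch-1.0 command; width, height and fps are parameters.
# The parentheses stay backslash-escaped for the kit's shell.
build_pipeline() {
    local width="${1:-1280}" height="${2:-720}" fps="${3:-30}"
    local caps="video/x-raw\(memory:GBM\),format=NV12,width=$width,height=$height,framerate=$fps/1,camera=0"
    printf '%s' "gst-launch-1.0 qtiqmmfsrc name=camsrc camera=0 ! $caps" \
        " ! qtimletflite config=/data/misc/camera/mle_tflite.config" \
        " model=/data/DLC/detect.tflite labels=/data/DLC/labelmap.txt" \
        " postprocessing=detection ! queue ! qtioverlay" \
        " ! waylandsink fullscreen=true async=true sync=false"
}

# Run the pipeline on the kit with the Weston runtime directory exported.
run_pipeline() {
    $ADB shell "export XDG_RUNTIME_DIR=/dev/socket/weston; $(build_pipeline "$@")"
}
```

For example, `push_model_files . && run_pipeline 1280 720 30` reproduces the manual steps, while `ADB=echo push_model_files .` only prints the adb commands. Other resolutions work only if the camera and qtiqmmfsrc support them.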

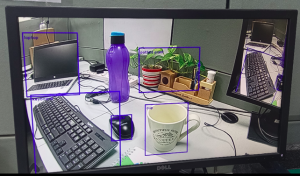

Sample output

The following image is a sample output received from the object detection application built using e-con Systems’ Qualcomm QCS610 based AI vision kit:

Figure 1 – Sample output of object detection model built using Qualcomm QCS610


More about e-con Systems’ Qualcomm QCS610 AI vision kit

qSmartAI80_CUQ610 from e-con Systems is a smart AI vision kit based on the Qualcomm QCS610 and optimized for thermal and power efficiency. With its Sony STARVIS IMX415 sensor, the vision kit is suitable for low-light and no-light embedded vision applications. This complete vision kit also comes with a carrier board, which makes it a ready-to-use camera for testing and prototyping embedded vision applications. To learn more about this AI vision kit, please visit its product page.

We hope this article gave you an understanding of how to run an object detection model on the Qualcomm QCS610 SoC. If you are looking for help in integrating cameras into your embedded vision device, please write to us at camerasolutions@e-consystems.com.

You could also visit the Camera Selector to have a look at our complete portfolio of cameras.

Vinoth Rajagopalan is an embedded vision expert with 15+ years of experience in product engineering management, R&D, and technical consultations. He has been responsible for many success stories in e-con Systems, from pre-sales and product conceptualization to launch and support. Having started his career as a software engineer, he currently leads a world-class team to handle major product development initiatives.