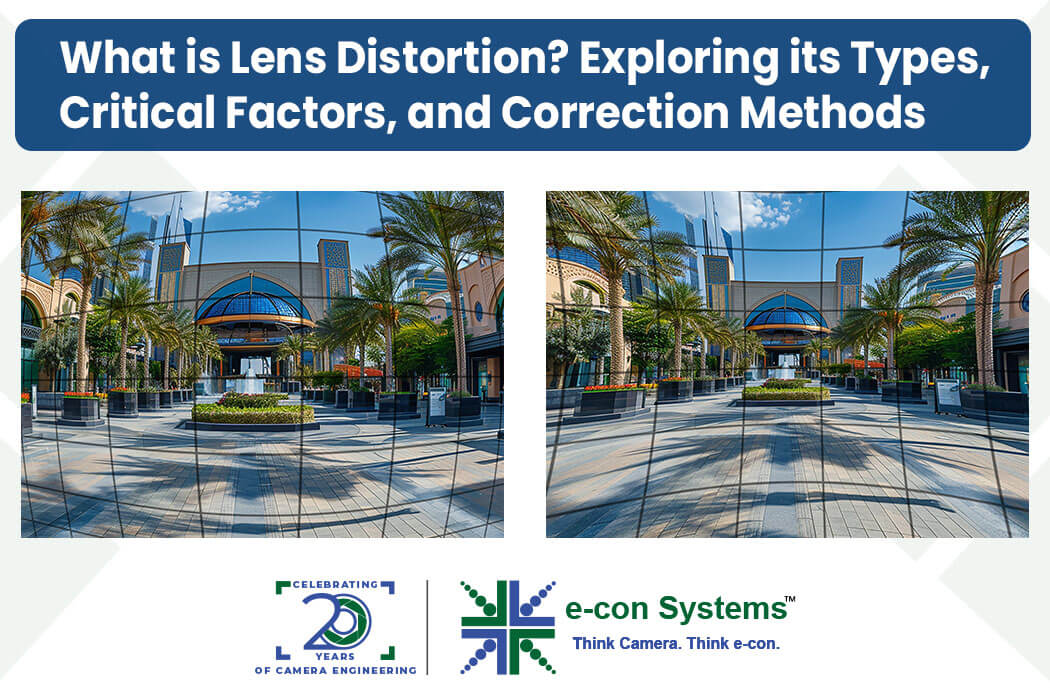

Have you ever noticed how straight lines sometimes appear curved near the edges of a wide-angle photo? That’s lens distortion at work! Distortion is a type of aberration that mainly affects the geometry of the image, that is, where details appear, rather than how sharp they are.

Aberration is any deviation of light from its ideal behavior, in which light rays from a point in the scene converge to a single point on the sensor to create a sharp image. Aberration happens due to a flaw in how a lens focuses light rays, which in turn degrades the image.

Distortion causes little information loss compared to other aberrations. It mainly affects the appearance of the image, which is why distortion is often referred to as a cosmetic aberration. Because the information is displaced rather than lost, distortion can be corrected using mathematical methods to a certain extent.

In this blog, we explore the causes of distortion, the types of distortion, and how distortion relates to factors such as wavelength and field of view (FOV).

How Does Distortion Happen?

To understand distortion, we must understand the process of refraction. Refraction is the bending of light due to a change in its speed as it travels from one medium to another. For example, light bends when it passes from air to glass. The angle of refraction (the angle at which the light continues in the new medium) depends on the angle at which the light arrives and on the refractive indices of the two media.
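The relationship between the two angles is given by Snell's law, n1 · sin(θ1) = n2 · sin(θ2). The short Python sketch below illustrates it. The function name and the refractive indices used (1.0 for air, 1.5 for glass) are illustrative assumptions, not values for any specific lens.

```python
import math

def refraction_angle_deg(incidence_deg, n1=1.0, n2=1.5):
    """Angle of the refracted ray from Snell's law: n1*sin(t1) = n2*sin(t2).

    n1 and n2 are assumed refractive indices (air and a generic glass).
    """
    s = n1 * math.sin(math.radians(incidence_deg)) / n2
    if abs(s) > 1.0:
        raise ValueError("total internal reflection: no refracted ray")
    return math.degrees(math.asin(s))

# Steeper incoming rays are bent by a larger amount.
for incidence in (0, 10, 30, 60):
    refracted = refraction_angle_deg(incidence)
    print(f"incidence {incidence:2d} deg -> refracted {refracted:5.2f} deg "
          f"(bent by {incidence - refracted:5.2f} deg)")
```

A ray arriving along the surface normal (0 degrees) is not bent at all, while rays arriving at steeper angles are bent progressively more.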

Distortion happens due to refraction, which is a change in the path of the light when it passes through the lens.

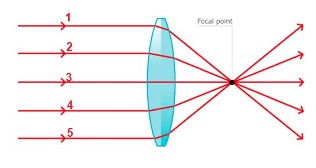

Figure 1: Refraction of Light

In a camera, this means that when light rays pass through the lens, each ray’s path changes by an amount that depends on how it is refracted.

In Figure 1, the light rays undergo refraction while passing through the lens. The lens tries to converge the light rays at a single point called the ‘focal point.’ The light ray marked ‘3’, which passes through the center of the lens, undergoes minimal refraction. Meanwhile, the light rays marked ‘1’ and ‘5’, which pass through the edges of the lens, undergo the maximum amount of refraction.

We can infer from this that the refraction of light rays passing through the lens varies with their path and the point on the lens that they hit. Because of this, details that should converge at an expected point sometimes converge at a different point (refer to Figure 2).

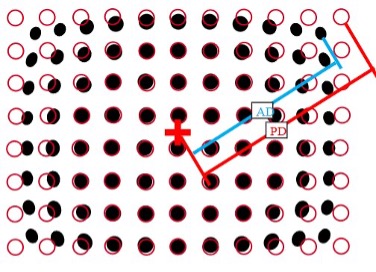

Figure 2: Distortion at the Corner of the Image


In the above figure, we can observe that at the corners of the image, the black spots that should fall inside the red circles are displaced, whereas at the center of the image, the black spots fall at the right position.

This is because, as we go from the center of the lens toward its edges, the light rays passing through are refracted more and therefore show more distortion. That is why there is no visible displacement at the center of the image, while toward the corners there is a perceivable amount of distortion.

Distortion vs. Other Aberrations

From the above information, we can infer that distortion is a geometrical aberration in which details are displaced from their expected positions. As distortion is just the misplacement of information and not the loss of information, we can correct it. The displaced parts of the image can be mapped back to their expected positions using mathematical methods. In other kinds of aberrations, such as chromatic aberration, information is lost and cannot be recreated.

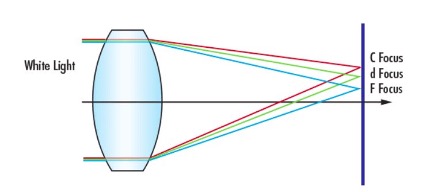

Figure 3: Lateral Chromatic Aberration


Figure 3 shows lateral chromatic aberration. In the figure, all the light rays should be focused at the ‘F focus.’ However, we can see that the light rays with green and red wavelengths are misplaced.

The pixel on which the misplaced green light is projected already has its own pixel data. When the misplaced green light falls on the same position, it mixes with the data that belongs there, so sampling these pixels gives a smudged and inaccurate image. This kind of aberration cannot be corrected, because we cannot subtract the misplaced intensity from the pixel: that intensity depends on many factors, such as the scene being shot, its colors, and the distance between the camera lens and the object. So, we cannot determine the intensity that has to be subtracted from a particular pixel to remove chromatic aberration.

However, in the case of distortion, we can calculate the distance by which a detail is misplaced (refer to Figure 2) and map it back to remove the distortion. Even so, not all distortions can be removed from an image.
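As a rough illustration of this mapping, the sketch below uses a single-coefficient radial model, which is a common simplification and not necessarily the model used in any particular camera pipeline. The coefficient `k1`, the normalized coordinates (measured from the image center), and the function names are assumptions for illustration; in practice, such coefficients come from calibrating the lens.

```python
def distort(x, y, k1):
    """Apply a one-coefficient radial model: p_d = p_u * (1 + k1 * r^2).

    (x, y) are normalized coordinates measured from the image center.
    """
    scale = 1.0 + k1 * (x * x + y * y)
    return x * scale, y * scale

def undistort(xd, yd, k1, iterations=20):
    """Recover the undistorted point by fixed-point iteration.

    The model has no simple closed-form inverse, so we start from the
    distorted point and repeatedly refine the estimate. This converges
    for mild distortion such as the value used below.
    """
    x, y = xd, yd
    for _ in range(iterations):
        scale = 1.0 + k1 * (x * x + y * y)
        x, y = xd / scale, yd / scale
    return x, y

k1 = -0.1                            # hypothetical coefficient
xd, yd = distort(0.8, 0.6, k1)       # where the lens places the point
xu, yu = undistort(xd, yd, k1)       # mapped back to where it belongs
print(f"distorted: ({xd:.4f}, {yd:.4f})  corrected: ({xu:.4f}, {yu:.4f})")
```

Correcting a full image applies the same idea to every pixel and interpolates between neighbors, which is one reason the correction works only to a certain extent.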

Loss of Information in Distortion

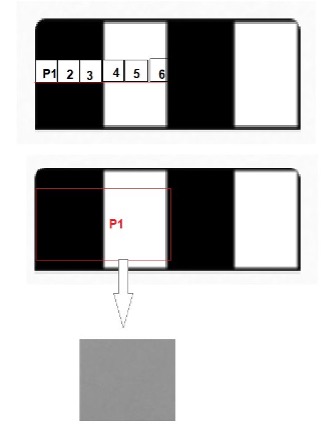

When distortion occurs due to the lens’ large FOV, a single pixel may end up sampling a large area of the scene, and information is lost. Let us understand this better with the following example (refer to Figure 4).

In Figure 4, ‘A’ represents the ideal case, where the black line area is sampled with three pixels, marked as P1, P2, and P3 in the figure. The white line area is sampled with another three pixels, marked as P4, P5, and P6. If, due to distortion, these pixel values are misplaced onto some other area of the image, say the next row of black and white lines, we could map them back and correct the image using mathematical methods.

Now, ‘B’ in Figure 4 represents the scenario where distortion cannot be corrected and information is lost. We can see that the pixel P1 samples a very large area, covering both the white line and the black line. The final value of the pixel turns out to be grey. In this way, the pixel represents information that is not present in the scene being captured. These kinds of distortions cannot be corrected using mathematical methods.

Figure 4: Loss of Information Due to Distortion
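The grey-pixel case can be mimicked with a toy one-dimensional example. The scene values, the `sample` helper, and the simple averaging model of a pixel are assumptions made only for illustration.

```python
def sample(scene, pixel_count):
    """Split the scene into equal areas and average each one into a pixel."""
    width = len(scene) // pixel_count
    return [sum(scene[i * width:(i + 1) * width]) / width
            for i in range(pixel_count)]

black_then_white = [0, 0, 0, 255, 255, 255]   # black line next to white line
white_then_black = [255, 255, 255, 0, 0, 0]   # a different scene

# Case A: six pixels, one per scene element. The two scenes stay distinct.
print(sample(black_then_white, 6))
print(sample(white_then_black, 6))

# Case B: one pixel covers the whole area. Both scenes collapse to the same
# grey value, so the original layout cannot be recovered from the sample.
print(sample(black_then_white, 1))
print(sample(white_then_black, 1))
```

Because two different scenes produce the same grey pixel, no mapping applied afterward can tell which scene was actually captured.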


Distortion vs. Wavelength

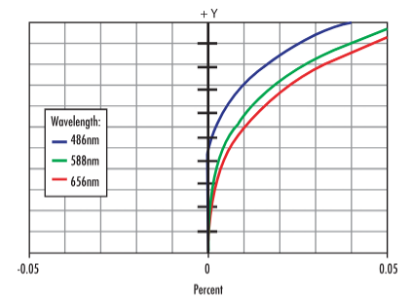

Distortion is usually treated as a monochromatic phenomenon. However, distortion does vary with the wavelength of light. The amount of refraction differs with wavelength, and since distortion is caused primarily by refraction, distortion also depends on the wavelength of light.

Differences in distortion caused by different wavelengths of light are represented in terms of the magnitude of misplacement.

Figure 5: Distortion vs Wavelength of Light
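To get a feel for the size of this effect, the sketch below applies Snell's law to the same incoming ray at two wavelengths. The refractive indices are approximate values for a typical crown glass and are assumed here only for illustration; the function name is hypothetical.

```python
import math

def refracted_angle_deg(incidence_deg, n_glass, n_air=1.0):
    """Snell's law for a ray entering glass from air."""
    s = n_air * math.sin(math.radians(incidence_deg)) / n_glass
    return math.degrees(math.asin(s))

# Approximate indices of a typical crown glass (assumed values).
indices = {"blue (~486 nm)": 1.5224, "red (~656 nm)": 1.5143}

incidence = 40.0
for name, n in indices.items():
    print(f"{name}: refracted at {refracted_angle_deg(incidence, n):.3f} deg")
```

Blue light sees a slightly higher refractive index than red, so it is bent a little more. Across a whole lens, this small difference means each wavelength is displaced by a slightly different amount.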


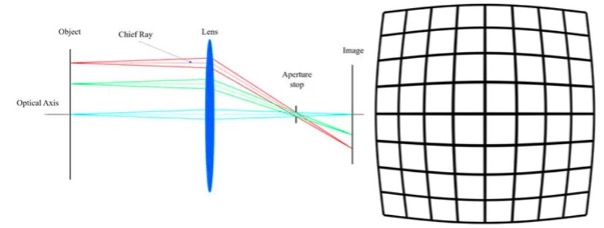

Distortion vs. FOV

A camera lens is a compound lens system built with different kinds of lenses, such as convex, concave, and flat lenses. The ultimate goal of these lenses is to converge the incoming light rays onto a single focus point. In practice, the magnitude of distortion increases as we go from the center of the lens to its edges. This is because refraction is stronger near the edges, where the lens surface is more steeply inclined to the incoming rays and the incident angle of the light rays is higher. In turn, this results in more distortion at the corners of the image.

Toward the corners, distortion grows with the cube of the field height, that is, of the distance of an image point from the image center. In other words, the displacement of a point increases cubically as we move away from the center.

Figure 5: Distortion Representation in Terms of Cubic Field
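One common way to write this cubic dependence is as a third-order radial term, where the displacement of a point is proportional to the cube of its normalized distance from the image center. The coefficient `k1` below is a hypothetical value chosen only for illustration.

```python
def radial_displacement(r, k1):
    """Third-order radial displacement: delta_r = k1 * r^3.

    r is the normalized distance from the image center (0 = center,
    1 = reference field height, e.g. the image corner).
    """
    return k1 * r ** 3

k1 = -0.05  # hypothetical coefficient
for r in (0.25, 0.5, 1.0):
    print(f"field {r:.2f}: displacement {radial_displacement(r, k1):+.5f}")

# Doubling the field height multiplies the displacement by 2^3 = 8.
ratio = radial_displacement(1.0, k1) / radial_displacement(0.5, k1)
print(f"displacement ratio between r=1.0 and r=0.5: {ratio:.1f}")
```

This is why distortion is barely noticeable near the center of the frame but grows quickly toward the corners.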


Types of Distortion

- Barrel Distortion: In barrel distortion, straight lines appear to curve outward, resembling a barrel. It normally occurs with wide-angle lenses, in which the light rays from the edges of the frame are refracted more than those from the center.

Figure 6: Barrel Distortion


- Pincushion Distortion: In this type of distortion, straight lines appear to curve inward, resembling the shape of a pincushion. It commonly occurs in telephoto lenses, where the light rays from the center of the frame are refracted more than those from the edges.

Figure 7: Pincushion Distortion

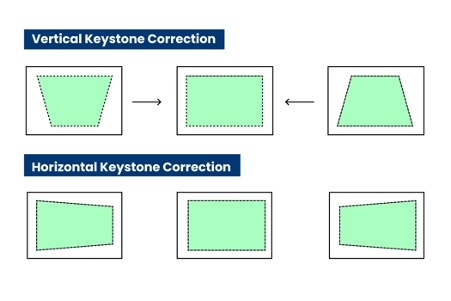

- Keystone Distortion: Keystone distortion occurs when the camera’s sensor plane is not parallel to the subject being photographed, resulting in converging or diverging vertical lines in the image.

Figure 8: Keystone Distortion

- Mustache Distortion: Mustache distortion is a combination of barrel and pincushion distortion, characterized by both inward and outward curvature along different sections of straight lines. It typically occurs in complex lens designs, particularly in certain wide-angle lenses.
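The three radial types above (barrel, pincushion, and mustache) can be told apart by how magnification changes with distance from the image center. The sketch below assumes a two-coefficient radial polynomial; the coefficient values and the function name are hypothetical. Keystone distortion is a perspective effect rather than a radial one, so it is not covered by this model.

```python
def magnification(r, k1, k2=0.0):
    """Relative magnification at normalized radius r: 1 + k1*r^2 + k2*r^4."""
    return 1.0 + k1 * r ** 2 + k2 * r ** 4

# Hypothetical coefficient pairs (k1, k2) for each radial distortion type.
lenses = {
    "barrel":     (-0.20, 0.00),   # magnification falls toward the edges
    "pincushion": (0.20, 0.00),    # magnification rises toward the edges
    "mustache":   (-0.30, 0.25),   # falls first, then rises again
}

radii = (0.0, 0.5, 0.775, 1.0)
for name, (k1, k2) in lenses.items():
    values = [round(magnification(r, k1, k2), 3) for r in radii]
    print(f"{name:10s} {values}")
```

With these assumed values, the mustache case shrinks points up to roughly three quarters of the way out and then recovers toward the corner, which gives straight lines their wavy shape.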

Correcting Lens Distortion

Lens distortion can be controlled by manipulating the path of the light rays. Introducing additional lens elements and aligning them well can control distortion to some extent. However, this can lead to a more complex design of lens systems, which leads to more internal reflections, causing flare in the images (Refer to Figure 9).

In Figure 9, we can see that both lenses have an equal field of view. The first image has more distortion. In the second image, the distortion is reduced, but due to more lenses in the system, internal reflections occur, resulting in a flare in the images.

Figure 9: Lens Flare Introduced with Distortion Correction


e-con Systems’ Cameras Help Tackle Imaging Artifacts

e-con Systems is an industry pioneer with 20+ years of experience in designing, developing, and manufacturing OEM cameras.

Our cameras are built with real-world applications in mind to tackle all kinds of imaging artifacts. They come equipped with fine-tuned ISPs to render the highest-quality output images.

e-con Systems also offers various customization services, including camera enclosures, resolution, frame rate, and sensors of your choice, to ensure our cameras fit perfectly into your embedded vision applications.

Visit e-con Systems’ Camera Selector Page to explore our wide range of cameras.

For queries and more information, email us at camerasolutions@e-consystems.com.

FAQs

- Does the human eye have distortion?

Yes, human eyes can have slight distortion! The eye’s curved shape and uneven density create a warped image on the retina. But our brain cleverly corrects for this distortion, giving us a clear picture of the world.

- How does focal length affect distortion?

Wide-angle lenses (short focal length) tend to exaggerate distortion, bending straight lines outward (barrel distortion). Telephoto lenses (longer focal length) cause less distortion, and can even compress the perspective, making distant objects appear closer together.

Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, and comes with a rich experience of more than 15 years in the embedded vision space. He brings to the table a deep knowledge in USB cameras, embedded vision cameras, vision algorithms and FPGAs. He has built 50+ camera solutions spanning various domains such as medical, industrial, agriculture, retail, biometrics, and more. He also comes with expertise in device driver development and BSP development. Currently, Prabu’s focus is to build smart camera solutions that power new age AI based applications.