Key Takeaways

- Why multi-sensor timing drift weakens edge AI perception

- How GNSS-disciplined clocks align cameras, LiDAR, radar, and IMUs

- Role of Orin NX as a central timing authority for sensor fusion

- Operational gains from unified time-stamping in autonomous vision systems

Autonomous vision systems deployed at the edge depend on seamless fusion of multiple sensor streams (cameras, LiDAR, radar, IMU, and GNSS) to interpret dynamic environments in real time. For NVIDIA Orin NX-based platforms, the challenge lies in aligning all of these data streams to within microseconds of each other to maintain spatial awareness and decision accuracy.

Timing offsets between unsynchronized sensors can break perception continuity in edge AI vision deployments. For instance, a camera might capture a frame before LiDAR delivers its scan, or the IMU might record motion slightly out of phase. Such mismatches produce misaligned depth maps, unreliable object tracking, and degraded AI inference performance. A sensor fusion system anchored on the Orin NX mitigates this issue through GNSS-disciplined synchronization.
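To get a feel for the scale of the problem, the short sketch below converts a time-stamp offset between two sensors into the apparent position error of a moving object. The speed and offsets are illustrative assumptions, not measurements from any specific platform.

```python
def position_error_m(relative_speed_mps: float, offset_s: float) -> float:
    """Distance an object moves during a time-stamp offset between two sensors."""
    return abs(relative_speed_mps * offset_s)


if __name__ == "__main__":
    # Hypothetical scenario: an object closing at 20 m/s (72 km/h).
    for offset_ms in (0.001, 1.0, 10.0, 33.3):
        err_m = position_error_m(20.0, offset_ms / 1000.0)
        print(f"offset {offset_ms:>6} ms -> {err_m * 100:.3f} cm misalignment")
```

At 20 m/s, a 10 ms offset already shifts the object by 20 cm between the camera frame and the LiDAR scan, while a 1 µs offset moves it by only 0.02 mm.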

In this blog, you’ll learn about the sensor fusion architecture, why a unified time base matters, and how it improves edge AI vision deployments.

What are the Different Types of Sensors and Interfaces?

| Sensor | Interface | Sync Mechanism | Timing Reference | Notes |
|---|---|---|---|---|
| GNSS Receiver | UART + PPS | PPS (1 Hz) + NMEA UTC | GPS time | Provides absolute time and PPS for system clock discipline |
| Cameras (GMSL) | GMSL (CSI) | Trigger derived from PPS | PPS-aligned frame start | Frames precisely aligned to GNSS time |
| LiDAR | Ethernet (USB NIC) | IEEE 1588 PTP | PTP synchronized to Orin NX | Time-stamped point clouds |
| Radar | Ethernet (USB NIC) | IEEE 1588 PTP | PTP synchronized to Orin NX | Time-stamped detections |
| IMU | I²C | Polled; software time stamp | Orin NX system clock (GNSS-disciplined) | Short-range sensor directly connected to Orin |

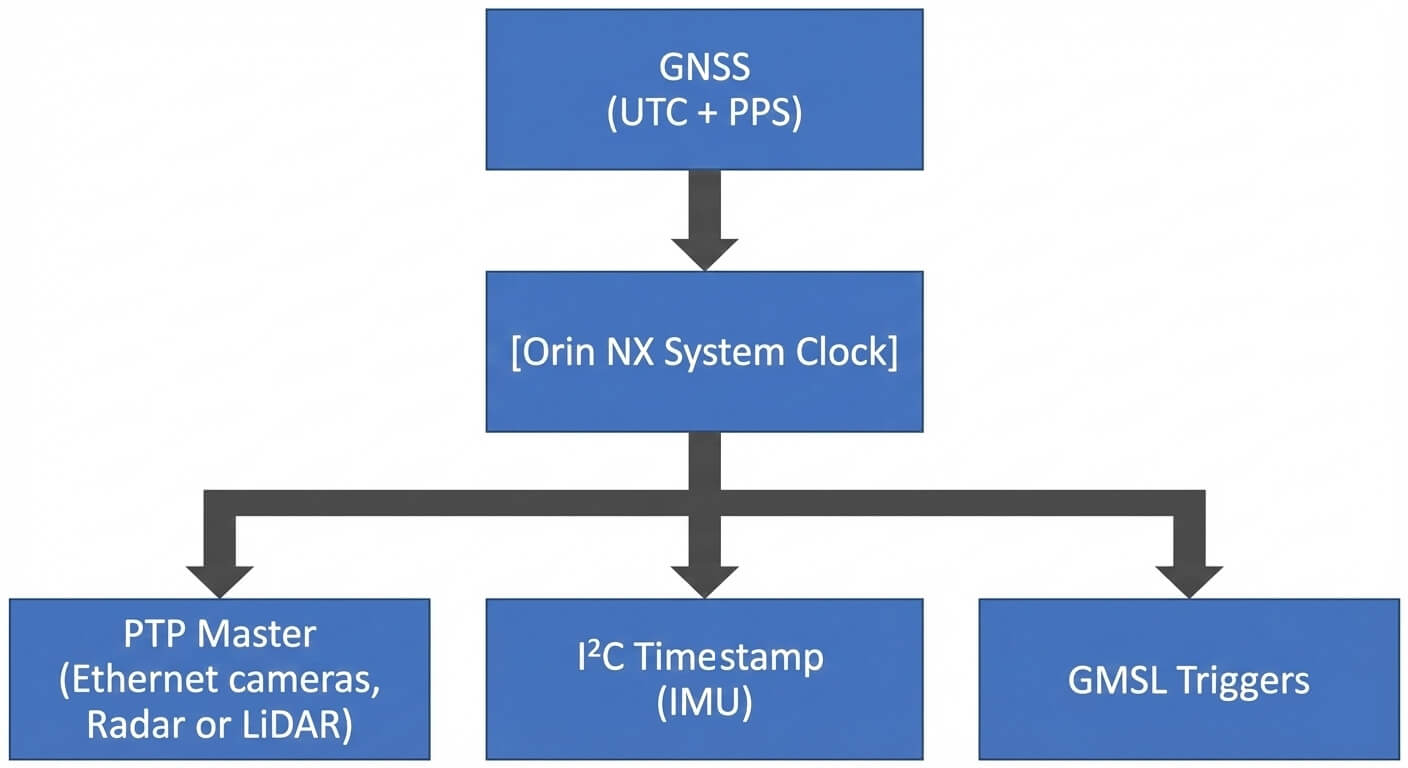

Coordinating Multi-Sensor Timing with Orin NX

Edge AI systems rely on timing discipline as much as compute power. The NVIDIA Orin NX acts as the central clock, aligning every connected sensor to a single reference point through GNSS time discipline.

The GNSS receiver sends a Pulse Per Second (PPS) signal and UTC data via NMEA to the Orin NX, which aligns its internal clock with global GPS time. This disciplined clock becomes the authority across all interfaces. From there, synchronization extends through three precise routes:

- PTP over Ethernet: The Orin NX functions as a PTP Grandmaster through its USB NIC. LiDAR and radar units operate as PTP slaves, delivering time-stamped point clouds and detections that stay aligned to the GNSS time domain.

- PPS-derived camera triggers: Cameras linked via GMSL or MIPI CSI receive frame triggers generated from the PPS signal. This aligns each frame start to GNSS time and keeps drift from accumulating between captures.

- Timed IMU polling: The IMU connects over I²C and is polled at consistent intervals, typically between 500 Hz and 1 kHz. Software time stamps are derived from the same GNSS-disciplined clock, keeping IMU data in sync with all other sensors.
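The camera trigger and IMU polling routes both come down to placing evenly spaced ticks inside each GNSS second. The sketch below models that schedule in software. It is only an illustration: the function name `tick_times_ns` and the example PPS edge value are hypothetical, and on real hardware the triggers would come from a timer or GPIO rather than a Python list.

```python
NS_PER_S = 1_000_000_000


def tick_times_ns(pps_edge_ns: int, rate_hz: int) -> list[int]:
    """Return the rate_hz tick times in the second that starts at pps_edge_ns.

    Each tick is computed from the PPS edge with integer arithmetic, so
    rounding error never accumulates from one tick to the next.
    """
    if rate_hz <= 0:
        raise ValueError("rate_hz must be positive")
    return [pps_edge_ns + (k * NS_PER_S) // rate_hz for k in range(rate_hz)]


if __name__ == "__main__":
    pps_edge_ns = 1_700_000_000 * NS_PER_S    # hypothetical PPS edge, ns since epoch
    camera = tick_times_ns(pps_edge_ns, 30)   # 30 fps trigger schedule
    imu = tick_times_ns(pps_edge_ns, 1000)    # 1 kHz poll schedule
    print(camera[:3])
    print(imu[:3])
```

Because every tick is re-derived from the latest PPS edge, the schedule restarts on each GNSS second instead of free-running on a local oscillator.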

Importance of a Unified Time Base

All sensors share the same GNSS-aligned time domain, enabling precise fusion of LiDAR, radar, camera, and IMU data.
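Once every stream carries time stamps from the same clock, pairing data across sensors becomes a simple lookup. The following is a minimal sketch of nearest-time-stamp matching between a camera frame and a list of LiDAR scans. The helper names and the tolerance parameter are assumptions made for illustration, and a production fusion stack may interpolate between samples rather than pick the nearest one.

```python
from bisect import bisect_left


def nearest_index(sorted_ts_ns: list[int], target_ns: int) -> int:
    """Index of the time stamp in sorted_ts_ns closest to target_ns."""
    if not sorted_ts_ns:
        raise ValueError("no time stamps to match against")
    i = bisect_left(sorted_ts_ns, target_ns)
    if i == 0:
        return 0
    if i == len(sorted_ts_ns):
        return i - 1
    before, after = sorted_ts_ns[i - 1], sorted_ts_ns[i]
    return i if after - target_ns < target_ns - before else i - 1


def match_frame(frame_ts_ns: int, lidar_ts_ns: list[int], tolerance_ns: int):
    """Pair a camera frame with the closest LiDAR scan, or None if too far apart."""
    j = nearest_index(lidar_ts_ns, frame_ts_ns)
    return j if abs(lidar_ts_ns[j] - frame_ts_ns) <= tolerance_ns else None


if __name__ == "__main__":
    lidar = [0, 100_000_000, 200_000_000]       # hypothetical 10 Hz scan times (ns)
    print(match_frame(99_000_000, lidar, 5_000_000))
    print(match_frame(150_000_001, lidar, 5_000_000))
```

Since index 0 is a valid match, callers should compare the result against `None` explicitly rather than test its truthiness.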

Implementation Guidelines for Stable Sensor Fusion

- USB NIC and PTP configuration: Confirm that the NIC supports hardware time-stamping (ethtool -T ethX reports its time-stamping capabilities) and enable it in the PTP stack so Ethernet sensors maintain sub-microsecond alignment.

- Camera trigger setup: Use a hardware timer or GPIO to generate PPS-derived triggers for consistent frame alignment.

- IMU polling: Maintain fixed-rate polling within Orin NX to align IMU data with the GNSS-disciplined clock.

- Clock discipline: Use both PPS and NMEA inputs to keep the Orin NX clock aligned to UTC for accurate fusion timing.
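For the clock discipline step, the PPS edge marks where a second begins and the NMEA sentence says which second it is. The sketch below shows one way to pull the UTC time out of an NMEA RMC sentence after validating its checksum. The function names are hypothetical. Which PPS edge a given sentence refers to is receiver-specific and should be verified against the receiver's documentation. On Linux this work is commonly delegated to a daemon such as gpsd or chrony rather than hand-written code.

```python
from datetime import datetime, timezone
from functools import reduce


def nmea_checksum(body: str) -> str:
    """XOR of all characters between '$' and '*', as two uppercase hex digits."""
    return f"{reduce(lambda acc, ch: acc ^ ord(ch), body, 0):02X}"


def parse_rmc_utc(sentence: str) -> datetime:
    """Extract the UTC date and time from an NMEA RMC sentence."""
    body, _, checksum = sentence.strip().lstrip("$").partition("*")
    if checksum.upper() != nmea_checksum(body):
        raise ValueError("NMEA checksum mismatch")
    fields = body.split(",")
    if not fields[0].endswith("RMC"):
        raise ValueError("not an RMC sentence")
    hhmmss, ddmmyy = fields[1], fields[9]
    # Fractional seconds are dropped: the PPS edge marks the second boundary.
    stamp = datetime.strptime(ddmmyy + hhmmss[:6], "%d%m%y%H%M%S")
    return stamp.replace(tzinfo=timezone.utc)


if __name__ == "__main__":
    # Illustrative sentence body; the checksum is computed rather than assumed.
    body = "GPRMC,123519,A,4807.038,N,01131.000,E,022.4,084.4,230394,003.1,W"
    sentence = f"${body}*{nmea_checksum(body)}"
    print(parse_rmc_utc(sentence).isoformat())
```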

Strengths of Leveraging Sensor Fusion-Based Autonomous Vision

Direct synchronization control

With no intermediate MCU in the timing path, the Orin NX handles synchronization internally, cutting latency and avoiding cross-processor jitter.

Unified global time-stamping

All sensors operate on GNSS time, ensuring every frame, scan, and motion reading aligns to a single reference.

Sub-microsecond Ethernet alignment

PTP synchronization keeps LiDAR and radar feeds locked to the same temporal window, maintaining accuracy across fast-moving scenes.
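The arithmetic behind PTP alignment is compact. Each Sync / Delay_Req exchange yields four time stamps, from which the slave estimates its clock offset and the path delay. The sketch below shows that standard calculation. The function name and example values are illustrative, and in practice a PTP daemon such as ptp4l performs this continuously using hardware time stamps.

```python
def ptp_offset_and_delay(t1: int, t2: int, t3: int, t4: int) -> tuple[float, float]:
    """Offset and mean path delay from one PTP Sync / Delay_Req exchange.

    t1: master sends Sync          (master clock)
    t2: slave receives Sync        (slave clock)
    t3: slave sends Delay_Req      (slave clock)
    t4: master receives Delay_Req  (master clock)
    Assumes the path delay is the same in both directions.
    """
    offset = ((t2 - t1) - (t4 - t3)) / 2   # slave clock minus master clock
    delay = ((t2 - t1) + (t4 - t3)) / 2    # one-way path delay
    return offset, delay


if __name__ == "__main__":
    # Hypothetical exchange in nanoseconds: slave runs 200 ns ahead, path delay 500 ns.
    offset_ns, delay_ns = ptp_offset_and_delay(1000, 1700, 2000, 2300)
    print(f"offset = {offset_ns} ns, delay = {delay_ns} ns")
```

The symmetric-delay assumption is why asymmetric links or software time-stamping can limit the accuracy PTP achieves.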

Deterministic frame capture

PPS-triggered cameras start each frame on a trigger derived from the GNSS PPS edge, preventing drift between visual and depth data.

Consistent IMU data

High-frequency IMU polling stays aligned with the master clock, preserving accurate motion tracking for fusion and localization.

e-con Systems Offers Custom Edge AI Vision Boxes

e-con Systems has been designing, developing, and manufacturing OEM camera solutions since 2003. We offer customizable Edge AI Vision Boxes powered by NVIDIA Orin NX and Orin Nano. They bring together multi-camera interfaces, hardware-level synchronization, and AI-ready processing into one cohesive unit for real-time vision tasks.

Our Edge AI Vision Box – Darsi simplifies the adoption of GNSS-disciplined fusion in robotics, autonomous mobility, and industrial vision. It comes with support for PPS-triggered cameras, PTP-synced Ethernet sensors, and flexible connectivity options. It also provides an end-to-end framework where developers can plug in sensors, train models, and run inference directly at the edge (without external synchronization hardware).

Know more -> e-con Systems’ Orin NX/Nano-based Edge AI Vision Box

Use our Camera Selector to find other best-fit cameras for your edge AI vision applications.

If you need expert guidance for selecting the right imaging setup, please reach out to camerasolutions@e-consystems.com.

FAQs

- What role does sensor fusion play in edge AI vision systems?

Sensor fusion aligns data from cameras, LiDAR, radar, and IMU sensors to a common GNSS-disciplined time base. It ensures every frame and data point corresponds to the same moment, thereby improving object detection, 3D reconstruction, and navigation accuracy in edge AI systems.

- How does NVIDIA Orin NX handle synchronization across sensors?

The Orin NX functions as both the compute core and timing master. It receives a PPS signal and UTC data from the GNSS receiver, disciplines its internal clock, and distributes synchronization through PTP for Ethernet sensors, PPS triggers for cameras, and fixed-rate polling for IMUs.

- Why is a unified time base critical for reliable fusion?

When all sensors share a single GNSS-aligned clock, the system eliminates time-stamp drift and timing mismatches. Fusion algorithms can then process coherent multi-sensor data streams, which enables the AI stack to operate with consistent depth, motion, and spatial context.

- What are the implementation steps for achieving stable sensor fusion?

Developers should enable hardware time-stamping for PTP sensors, use PPS-based hardware triggers for cameras, poll IMUs at fixed intervals, and feed both PPS and NMEA inputs into the Orin NX clock. These steps maintain accurate UTC alignment through long runtime cycles.

- How does e-con Systems support developers building with Orin NX?

e-con Systems provides customizable Edge AI Vision Boxes powered by NVIDIA Orin NX and Orin Nano. They are equipped with synchronized camera interfaces, AI-ready processing, and GNSS-disciplined timing. Hence, product developers can deploy real-time vision solutions quickly and with full temporal accuracy.

Prabu is the Chief Technology Officer and Head of Camera Products at e-con Systems, with more than 15 years of experience in the embedded vision space. He brings deep knowledge of USB cameras, embedded vision cameras, vision algorithms, and FPGAs. He has built 50+ camera solutions spanning various domains such as medical, industrial, agriculture, retail, biometrics, and more. He also has expertise in device driver development and BSP development. Currently, Prabu’s focus is to build smart camera solutions that power new-age AI-based applications.