What you will learn:

- How cameras detect drowsiness, distraction, object presence, and posture to prevent accidents

- Why features like IR/NIR sensitivity, wide FoV, and global shutter are necessary for accurate monitoring across conditions

- How interface choices affect low-latency integration within vehicle architectures

- How flexible mounting positions and compact form factors ensure seamless integration

Driver-assistance systems are evolving fast, and how they respond increasingly depends on knowing what the driver is doing. Cameras placed inside the vehicle cabin are the primary source of that knowledge. These embedded cameras track the driver’s eye movement, head position, facial orientation, and sometimes even hand gestures.

So, if a driver begins to nod off or glance away for extended periods, the system can trigger visual or audio alerts, or even adjust how the vehicle responds. If the driver fails to respond altogether, some vehicles initiate automatic braking or lane correction.

In this blog, you’ll learn how in-cabin monitoring cameras work through their primary use cases, along with the imaging features they need.

Use Cases of In-Cabin Monitoring Cameras

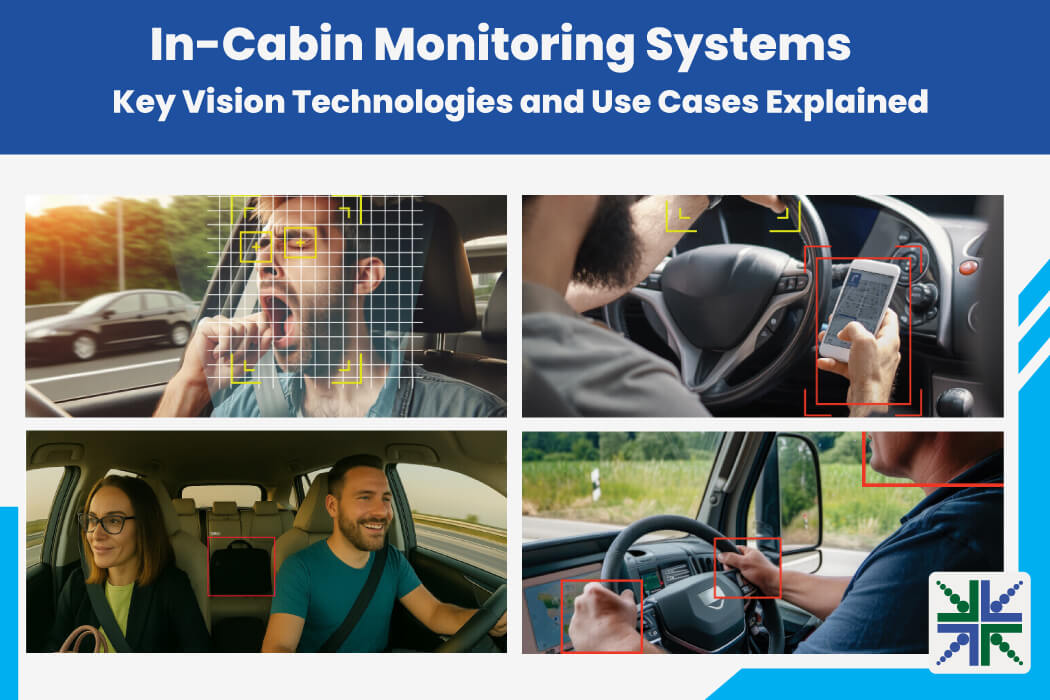

Drowsiness detection

Falling asleep while driving can lead to accidents within seconds. In-cabin monitoring cameras focus on eye behavior, head tilt, and gaze fixation to detect these patterns early. The system builds a real-time profile of the driver’s alertness by measuring blink rate, eyelid closure, and duration of visual engagement.
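
One common way to turn eyelid-closure measurements into an alertness score is PERCLOS (percentage of eyelid closure): the fraction of frames in a sliding window in which the eyes are mostly closed. This is a minimal sketch of that one approach, not necessarily what any given system uses. It assumes a hypothetical upstream eye-landmark model that supplies a per-frame eye-openness ratio between 0 (closed) and 1 (fully open); the closure cutoff, window length, and alert threshold are illustrative values only.

```python
from collections import deque


class PerclosMonitor:
    """Sliding-window PERCLOS estimator (illustrative sketch).

    Assumes each frame provides an eye-openness ratio in [0, 1] from a
    hypothetical upstream eye-landmark model.
    """

    def __init__(self, fps=30, window_s=60.0, closed_below=0.2, alert_at=0.15):
        # A frame counts as "closed" when openness < closed_below, i.e. the
        # eyelid covers more than 80% of the eye (example criterion).
        self.closed_below = closed_below
        self.alert_at = alert_at  # example PERCLOS alert threshold
        self.window = deque(maxlen=int(fps * window_s))  # oldest frames drop out

    def update(self, openness):
        """Add one frame and return the current PERCLOS value in [0, 1]."""
        self.window.append(openness < self.closed_below)
        return sum(self.window) / len(self.window)

    def is_drowsy(self):
        # Only decide once the window is full, so a few early closed
        # frames cannot trigger a false alert.
        full = len(self.window) == self.window.maxlen
        return full and sum(self.window) / len(self.window) >= self.alert_at


# Demo with a short 10-frame window (1 s at 10 fps): 3 of 10 frames closed.
monitor = PerclosMonitor(fps=10, window_s=1.0)
for openness in [0.9] * 7 + [0.1] * 3:
    perclos = monitor.update(openness)
print(perclos, monitor.is_drowsy())  # 0.3 True
```

Waiting for a full window trades a short start-up delay for fewer false alarms; blink rate or blink duration could be tracked alongside PERCLOS in the same per-frame loop.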

Distracted driving

Even a brief look away from the road reduces the driver’s awareness of what is happening ahead. In-cabin cameras detect distraction by analyzing head orientation, gaze direction, and upper-body movement. If the driver keeps turning away from the windshield or dashboard, the system responds with escalating alerts.
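
The escalating-alert behavior can be sketched as a small timer driven by head-pose angles, used here as a simple proxy for gaze direction. The yaw and pitch limits, the 2 s and 4 s escalation times, and the alert level names are hypothetical example values; production systems tune such thresholds against their own gaze models and applicable requirements.

```python
from dataclasses import dataclass


@dataclass
class DistractionMonitor:
    """Escalating distraction alerts from head pose (illustrative sketch).

    All thresholds below are hypothetical example values.
    """
    max_yaw_deg: float = 30.0    # left/right head turn still counted as "on road"
    max_pitch_deg: float = 20.0  # up/down head tilt still counted as "on road"
    warn_after_s: float = 2.0    # continuous off-road time before a visual warning
    alarm_after_s: float = 4.0   # continuous off-road time before an audible alarm
    off_road_s: float = 0.0      # accumulated continuous off-road time

    def update(self, yaw_deg, pitch_deg, dt_s):
        """Feed one frame's head pose; return 'none', 'visual', or 'audible'."""
        on_road = abs(yaw_deg) <= self.max_yaw_deg and abs(pitch_deg) <= self.max_pitch_deg
        # Reset the timer as soon as the driver looks back at the road.
        self.off_road_s = 0.0 if on_road else self.off_road_s + dt_s
        if self.off_road_s >= self.alarm_after_s:
            return "audible"
        if self.off_road_s >= self.warn_after_s:
            return "visual"
        return "none"


# Driver holds a 45-degree head turn; frames arrive every 0.5 s.
monitor = DistractionMonitor()
levels = [monitor.update(yaw_deg=45.0, pitch_deg=0.0, dt_s=0.5) for _ in range(9)]
print(levels[0], levels[3], levels[-1])  # none visual audible
```

Timing the continuous off-road interval, rather than reacting to single frames, keeps quick mirror checks from triggering alerts.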

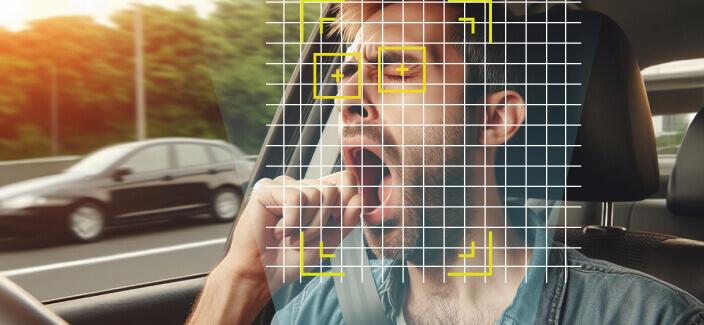

Object detection

Object detection systems distinguish between living occupants and inanimate objects. This distinction becomes critical when the vehicle decides whether to issue seatbelt reminders, deploy airbags, or prompt the driver. Hence, cameras must capture enough detail for the system to recognize shapes, textures, and reflectivity signatures.
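
How a per-seat classification might gate those downstream decisions can be sketched as follows. The seat classes and the action rules are hypothetical simplifications for illustration only; real seatbelt-reminder and airbag logic is defined by the vehicle maker and applicable regulations.

```python
from enum import Enum


class SeatContent(Enum):
    """Hypothetical per-seat classes produced by an in-cabin vision model."""
    EMPTY = "empty"
    OBJECT = "object"          # e.g. a bag or box on the seat
    CHILD_SEAT = "child_seat"
    ADULT = "adult"


def seat_actions(content, belt_buckled):
    """Map a seat classification to example actions (illustrative policy only)."""
    occupied_by_person = content in (SeatContent.ADULT, SeatContent.CHILD_SEAT)
    return {
        # Remind about the seatbelt only when a person, not an object, is present.
        "belt_reminder": content is SeatContent.ADULT and not belt_buckled,
        # Example policy: enable the seat's airbag only for an adult occupant.
        "airbag_enabled": content is SeatContent.ADULT,
        "occupant_present": occupied_by_person,
    }


# A bag on the passenger seat should not trigger a belt reminder.
print(seat_actions(SeatContent.OBJECT, belt_buckled=False))
# {'belt_reminder': False, 'airbag_enabled': False, 'occupant_present': False}
```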

Body posture

If a driver slouches, leans excessively, or turns toward the rear, the system flags reduced driving control. For passengers, posture data contributes to accurate airbag deployment and seatbelt tensioning. Hence, cameras must capture full-body behavior clearly enough for it to be interpreted in real time.
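
One simple way to flag leaning or slouching is to measure how far the torso axis tilts from vertical. This sketch assumes a hypothetical upstream pose estimator that supplies 2D shoulder and hip keypoints in image pixels; the 25-degree threshold is an example value, and because the angle is measured in the image, it is only a proxy that depends on the camera’s mounting angle.

```python
import math


def torso_lean_deg(l_shoulder, r_shoulder, l_hip, r_hip):
    """Angle in degrees between the hip-to-shoulder axis and image vertical.

    Keypoints are (x, y) pixel tuples with y increasing downward, as in
    most image coordinate systems.
    """
    sx = (l_shoulder[0] + r_shoulder[0]) / 2
    sy = (l_shoulder[1] + r_shoulder[1]) / 2
    hx = (l_hip[0] + r_hip[0]) / 2
    hy = (l_hip[1] + r_hip[1]) / 2
    # Vector from the hip midpoint to the shoulder midpoint; an upright torso
    # points straight up in the image (dx = 0, dy < 0).
    dx, dy = sx - hx, sy - hy
    return math.degrees(math.atan2(abs(dx), -dy))


def is_out_of_position(lean_deg, max_lean_deg=25.0):
    """Flag excessive lean; 25 degrees is a hypothetical example threshold."""
    return lean_deg > max_lean_deg


# Shoulders shifted 100 px sideways relative to the hips, 200 px above them.
lean = torso_lean_deg((200, 100), (300, 100), (100, 300), (200, 300))
print(round(lean, 1), is_out_of_position(lean))  # 26.6 True
```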

Top Imaging Features of In-Cabin Monitoring Cameras

IR illumination and NIR sensitivity

Cabin lighting conditions can shift quickly, from bright daylight to dim evening commutes, making it hard to detect subtle cues like pupil movement or eyelid closure. Hence, in-cabin cameras rely on active IR illumination. IR LEDs, typically at 850 nm or 940 nm, emit light that is essentially invisible to the human eye but detected by NIR-sensitive sensors. This keeps the driver consistently illuminated regardless of ambient light levels.

The in-cabin monitoring camera’s sensor must also have strong NIR sensitivity to accurately capture features such as pupils, eyebrows, and skin under IR illumination. Good NIR response delivers stable, high-contrast images during night drives or in shadowed environments, and removes the need for mechanical filters that switch between IR and RGB capture.

Wide Field of View

The interior of a cabin is not a flat plane. The camera must capture facial expressions, head movements, hand gestures, and upper-body shifts: all from a fixed mount point. A narrow lens would miss critical parts of the scene, especially in large vehicles or flexible seating layouts.

A wide Field of View (FoV) ensures that a single camera can observe the full driver region, and sometimes passenger areas as well. It increases system reliability while reducing the need for multiple cameras or moving mounts.
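
Whether a lens covers the driver region can be estimated with basic pinhole-camera geometry: the horizontal FoV is 2·atan(w / 2f) for sensor width w and focal length f, and the scene width covered at distance d is 2·d·tan(FoV / 2). The sensor width, focal lengths, and 0.8 m distance below are illustrative numbers, not specifications of any particular camera, and strongly distorting wide-angle lenses deviate from this ideal model.

```python
import math


def horizontal_fov_deg(sensor_width_mm, focal_length_mm):
    """Horizontal field of view of an ideal (pinhole/rectilinear) lens."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))


def coverage_width_m(fov_deg, distance_m):
    """Width of the scene visible at a given distance from the camera."""
    return 2 * distance_m * math.tan(math.radians(fov_deg) / 2)


# Example: 5.6 mm wide sensor with a 2.8 mm lens, driver about 0.8 m away.
fov = horizontal_fov_deg(5.6, 2.8)
print(round(fov, 1), round(coverage_width_m(fov, 0.8), 2))  # 90.0 1.6
```

With the same sensor, a 6 mm lens gives roughly 50 degrees and about 0.75 m of coverage at that distance, which shows how quickly a narrower lens loses the passenger side of the cabin.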

Global shutter

Drivers move, and so do steering wheels, dashboard reflections, and handheld devices. Image capture must therefore stay accurate even when the scene contains fast motion. Rolling shutter sensors expose and read out the frame row by row, so an object that moves during readout appears skewed, which can mislead detection algorithms.

Global shutter sensors eliminate this issue by exposing every pixel of the frame at the same instant. This avoids skew and other rolling-shutter distortions, which is especially important for eye tracking, gesture classification, and driver attention monitoring. Motion blur, by contrast, depends on exposure time, so short exposures are still needed to keep fast movements sharp.
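
The size of rolling-shutter skew can be estimated from the sensor’s readout time. Rows are read one after another, so a feature moving at v pixels per second shifts by v times the time between reading its top and bottom rows. The hand speed, 20 ms readout time, 1080-row frame, and 300-row object height below are assumed example values.

```python
def rolling_shutter_skew_px(speed_px_per_s, frame_readout_s, rows_spanned, total_rows):
    """Horizontal shift between the top and bottom of a moving object.

    Assumes rows are read out at a constant rate over frame_readout_s.
    A global shutter exposes all rows together, so its skew is zero.
    """
    row_time_s = frame_readout_s / total_rows
    return speed_px_per_s * row_time_s * rows_spanned


# A hand gesture moving 2000 px/s that spans 300 of 1080 rows.
skew = rolling_shutter_skew_px(speed_px_per_s=2000, frame_readout_s=0.02,
                               rows_spanned=300, total_rows=1080)
print(round(skew, 1))  # 11.1
```

An 11-pixel lean in a hand outline is easily enough to distort a gesture classifier’s input, which is why the skew term matters even at moderate speeds.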

GMSL2 vs. MIPI interface

In-vehicle wiring must deal with EMI, space constraints, and long cable runs. Standard USB or ribbon-cable interfaces struggle with these demands. GMSL2 (Gigabit Multimedia Serial Link 2) addresses them by providing high-speed data transfer over coaxial cables up to 15 meters long. It carries video data, power, and control signals over a single cable. It also integrates readily with NVIDIA Jetson-based platforms.

MIPI (Mobile Industry Processor Interface), typically through its CSI-2 camera interface, fits a different niche. It works best for compact, standalone camera modules where the sensor sits close to the processor. Its direct, low-latency link suits setups that prioritize minimal delay and a small footprint over cable length.
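
A quick bandwidth check helps confirm that a camera stream fits a given link. This sketch computes the raw pixel data rate (width × height × frame rate × bits per pixel) and compares it with a link budget. The 6 Gbps value is GMSL2’s nominal forward-channel rate; the 4-lane × 1.5 Gbps CSI-2 budget and the 80% usable-payload factor are assumptions for illustration, since real throughput depends on protocol overhead and the specific serializer, deserializer, and processor.

```python
def raw_video_gbps(width, height, fps, bits_per_pixel):
    """Uncompressed pixel data rate in Gbps (ignores blanking and packet overhead)."""
    return width * height * fps * bits_per_pixel / 1e9


def fits_link(stream_gbps, link_gbps, usable_fraction=0.8):
    """True if the stream fits in the usable share of the link.

    usable_fraction is an assumed allowance for protocol overhead.
    """
    return stream_gbps <= link_gbps * usable_fraction


GMSL2_FORWARD_GBPS = 6.0    # nominal GMSL2 forward-channel rate
CSI2_4LANE_GBPS = 4 * 1.5   # assumed example: 4 D-PHY lanes at 1.5 Gbps each

# Example stream: 1920 x 1080 at 60 fps in RAW10.
dms = raw_video_gbps(1920, 1080, 60, 10)
print(round(dms, 2), fits_link(dms, GMSL2_FORWARD_GBPS), fits_link(dms, CSI2_4LANE_GBPS))
# 1.24 True True
```

Both links carry this stream comfortably; the choice between them then comes down to cable length, EMI exposure, and footprint rather than raw bandwidth.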

RGB-IR vs. monochrome

RGB-IR cameras capture both color and infrared imagery, so a single sensor can support driver and passenger monitoring across lighting conditions. During the day, they provide detailed color images; at night, they rely on their IR pixels under active IR illumination, with no mechanical IR-cut filter to switch.

Monochrome IR cameras, by contrast, are built solely for infrared capture, offering stronger low-light performance and higher contrast when color data is unnecessary.
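
One trade-off between the two sensor types can be illustrated by pulling the dedicated IR samples out of a raw RGB-IR frame. This sketch assumes a simple repeating 2×2 pattern with one IR pixel per block at a hypothetical position; real RGB-IR sensors use various layouts, including 4×4 patterns, so the offsets must come from the sensor’s datasheet. In this assumed layout only one photosite in four is a dedicated IR sample, whereas a monochrome IR sensor uses every pixel for the NIR image.

```python
def extract_ir_plane(raw, ir_row=1, ir_col=0):
    """Return the IR samples from a raw mosaic with a 2x2 repeating pattern.

    raw is a list of rows (lists of ints). ir_row/ir_col give the IR pixel's
    position inside each 2x2 block; the default (row 1, col 0) is a
    hypothetical layout, not taken from any specific sensor.
    """
    return [row[ir_col::2] for row in raw[ir_row::2]]


# 4x4 raw frame: rows alternate R G R G / IR B IR B in this assumed layout.
raw = [
    [10, 20, 11, 21],
    [90, 30, 91, 31],
    [12, 22, 13, 23],
    [92, 32, 93, 33],
]
print(extract_ir_plane(raw))  # [[90, 91], [92, 93]]
```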

Mounting options

In-cabin cameras can be positioned in multiple locations to achieve optimal coverage. Windshield-mounted units give a broad, elevated view of both driver and passenger areas. Steering column-mounted cameras deliver a direct angle for precise tracking of eye and head movements. A-pillar-mounted cameras offer a side perspective that complements other viewpoints, improving overall monitoring accuracy.

The choice of mounting position depends on the vehicle’s interior layout, safety regulations, and desired field of view. In some deployments, multiple mounting points are combined to ensure redundancy and reduce blind spots.

Form factor

The cabin offers limited space for hardware. Cameras must fit inside tight enclosures, behind mirrors, in dashboards, or on the steering column. Large units block visibility or interfere with driver comfort. Size, weight, and mounting flexibility become critical design considerations.

Compact, lightweight camera modules with side, top, or rear exit connectors improve integration. Developers can also choose between bare-board versions for custom enclosures or enclosed versions for direct mounting.

High-Performance Mobility Cameras by e-con Systems

Since 2003, e-con Systems has been designing, developing, and manufacturing high-performance OEM cameras, including those that meet the demands of real-time in-cabin vision. These cameras support next-generation driver monitoring systems across commercial fleets, electric vehicles, trucks, and passenger cars.

Built on our experience deploying cameras in automotive environments, our cameras combine compact design, infrared support, global shutter sensors, and GMSL2 interfaces to meet system-level goals without compromise.

Explore all our in-cabin monitoring cameras

Use our Camera Selector to browse through e-con Systems’ full portfolio.

If you need to find and deploy the perfect in-cabin monitoring camera, please write to camerasolutions@e-consystems.com.

FAQs

- What is the difference between NIR imaging and standard visible light imaging in in-cabin systems?

NIR (Near-Infrared) imaging enables cameras to operate in complete darkness using infrared LEDs, while visible light imaging depends on ambient illumination. In-cabin systems use NIR to track driver behavior consistently during night drives, tunnel crossings, or when cabin lights are off.

- Why is a global shutter sensor preferred for driver monitoring applications?

Global shutter sensors capture the entire frame in one instant, preventing the skew and distortion that rolling shutter readout introduces under motion. This is crucial in driver monitoring because even quick eye or head movements must be recorded accurately to assess alertness or distraction.

- How does GMSL2 improve in-cabin camera integration?

GMSL2 carries high-speed video, power, and control signals over a single coaxial cable, reducing wiring complexity. The shielded coaxial link also resists electromagnetic interference and supports long cable runs, which is important for placing cameras throughout a vehicle without signal degradation or added latency.

- Can one in-cabin camera cover both driver and passenger monitoring?

Yes. If the camera has a wide Field of View and sufficient resolution, it can monitor both the driver and front passenger. This setup is commonly used in shared mobility, airbag control, and child presence detection systems.

- What platforms are compatible with e-con Systems’ in-cabin monitoring cameras?

e-con Systems’ in-cabin cameras are validated on NVIDIA Jetson platforms such as AGX Orin and Xavier. They also support integration with automotive-grade processors from vendors like NXP and Qualcomm, depending on the required interface and feature set.

Suresh Madhu is the product marketing manager with 16+ years of experience in embedded product design, technical architecture, SOM product design, camera solutions, and product development. He has played an integral part in helping many customers build their products by integrating the right vision technology into them.