Advanced embedded vision systems are giving the life sciences industry new ways to carry out mission-critical processes. For instance, recent trends in hematology have given rise to automated hematology analyzers, which rapidly analyze whole blood specimens for the Complete Blood Count (CBC). Results include Red Blood Cell (RBC) count, White Blood Cell (WBC) count, platelet count, hemoglobin concentration, hematocrit, RBC indices, and leukocyte differentials.

To carry out these types of blood analyses, specimens have to be collected in blood collection tubes. These tubes also help prepare the sample correctly for testing and analysis. They come in different types, and classifying them for appropriate usage is a mundane, repetitive task that can be automated with the help of embedded cameras. But using camera-enabled systems to carry out this task accurately is easier said than done.

Discover the three key challenges of leveraging embedded vision technology to classify blood collection tubes, how to overcome them practically, and whether to select cloud AI or edge AI for processing and analysis. Also, find out how e-con Systems’ e-CAM512_USB using NXP’s RT 1170 MCU – an edge AI camera with advanced AI capabilities – can be a game-changer for your application.

Challenges of classifying blood collection tubes

There are different types of blood collection tubes, which come in different colors. Hence, it must be possible to add new tube colors to the system with minimal time and effort. However, that is just one of the challenges you may face, as we found out while working closely with a leading life sciences solution provider. We will use this customer project as a reference to explain how to overcome these challenges.

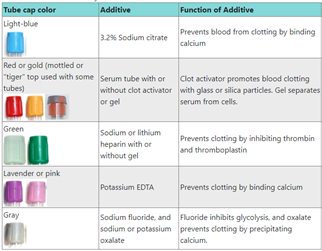

First, let’s look at the various types of blood collection tubes.

Figure 1 – Types of blood collection tubes (source: LabCE)


Our client required the analyzers to differentiate between multiple types of blood collection tubes and analyze each accordingly. The three challenges we faced during the implementation were:

- Skewness in blood collection tube position.

- Changes in lighting conditions.

- Multiple colors.

Skewness in blood collection tube position

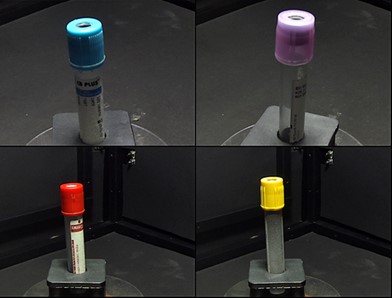

Since a blood collection tube placed in the stand will not always be straight, there may be some skewness in the placement. This can be seen in the below images:

Figure 2 – Skewness in the placement of blood collection tubes


So, the training dataset must also cover this random skew. Otherwise, the blood collection tubes may not be classified correctly during inference.

We addressed this challenge by introducing random rotation in the dataset. Using data augmentation, we ensured that the model could adapt to the randomness in skew.
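As a rough illustration of random-rotation augmentation, the sketch below rotates a small grayscale image (a list of pixel rows) by random angles within a chosen range. It is a dependency-free toy, not the pipeline used in the project: the function names, the ±15° default range, and the nearest-neighbour resampling are all assumptions made for this example. A production pipeline would normally rely on an imaging or deep learning library instead.

```python
import math
import random


def rotate_image(image, angle_deg, fill=0):
    """Rotate a 2D grid of pixel values about its centre (nearest-neighbour)."""
    height, width = len(image), len(image[0])
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    rotated = [[fill] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            # Inverse mapping: find the source pixel that lands on (x, y).
            dx, dy = x - cx, y - cy
            src_x = cos_t * dx + sin_t * dy + cx
            src_y = -sin_t * dx + cos_t * dy + cy
            sx, sy = int(round(src_x)), int(round(src_y))
            # Pixels that map outside the source keep the fill value.
            if 0 <= sx < width and 0 <= sy < height:
                rotated[y][x] = image[sy][sx]
    return rotated


def augment_with_random_skew(image, copies=4, max_angle_deg=15.0, seed=None):
    """Return `copies` (angle, rotated_image) pairs with random skew angles.

    The angle range is a hypothetical choice; it should be tuned to the
    skew actually observed in the tube stand.
    """
    rng = random.Random(seed)
    angles = [rng.uniform(-max_angle_deg, max_angle_deg) for _ in range(copies)]
    return [(angle, rotate_image(image, angle)) for angle in angles]
```

Each original training image would be passed through `augment_with_random_skew`, and the rotated copies added to the dataset with the same class label as the original.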

Some samples after data augmentation are shown below:

Figure 3 – Blood collection tubes after data augmentation for skew


With this augmentation, the model can classify blood collection tubes placed at different angles, which was a significant advantage for the client.

Changes in lighting conditions

It quickly became apparent that the ambient lighting would not be the same in all scenarios. It can vary when the environment is exposed to natural light, when the camera's exposure changes, or when the environment itself changes.

So, we had to train the model to adapt to different lighting conditions. We did so by using data augmentation to vary the brightness level of the images in the dataset.
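A minimal sketch of brightness augmentation is shown below, assuming 8-bit grayscale images stored as lists of pixel rows. The article only states that the brightness level was varied; the multiplicative scaling, the 0.6 to 1.4 factor range, and the function names are assumptions for illustration. For color images, the same scaling would be applied to each channel.

```python
import random


def adjust_brightness(image, factor):
    """Scale every 8-bit pixel value by `factor`, clamping to 0..255."""
    return [
        [min(255, max(0, int(round(pixel * factor)))) for pixel in row]
        for row in image
    ]


def augment_with_random_brightness(image, copies=4, low=0.6, high=1.4, seed=None):
    """Return `copies` (factor, adjusted_image) pairs.

    Factors below 1.0 darken the image and factors above 1.0 brighten it.
    The default range is a hypothetical choice, not a value from the project.
    """
    rng = random.Random(seed)
    factors = [rng.uniform(low, high) for _ in range(copies)]
    return [(factor, adjust_brightness(image, factor)) for factor in factors]
```

As with the rotation example, each augmented copy keeps the class label of the image it was derived from.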

Some samples after data augmentation for varying lighting are shown below:

Figure 4 – Blood collection tubes after data augmentation for varying lighting


This data augmentation enabled the model to detect blood collection tubes across different lighting conditions.

Multiple colors

As mentioned earlier, there are multiple types of blood collection tubes in different colors, and it is important to be able to add new tube colors without spending excessive time and effort. This is where transfer learning came into play.

Because the model is already trained to extract the features required to classify blood collection tubes, we retained the weights and biases of the feature extraction layers and re-trained only the top layers with the new dataset. This meant the model did not need to be trained from scratch, saving a significant amount of time and effort.

The most common incarnation of transfer learning in the context of deep learning is the following workflow:

- Take layers from a previously trained model.

- Freeze them – avoid destroying any of the information they contain during future training rounds.

- Add some new, trainable layers on top of the frozen layers – they will learn to turn the old features into predictions on a new dataset.

- Train the new layers on your dataset.

A last (and optional) step is fine-tuning, which consists of unfreezing the entire model you obtained above (or part of it) and re-training it on the new data with a very low learning rate. This can potentially achieve meaningful improvements by incrementally adapting the pre-trained features to the new data.
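The freeze-then-train-a-new-head workflow can be sketched with a toy two-layer network in plain Python. This is only an illustration of the idea, not e-con's CNN: the `DenseLayer` class, the placeholder "pre-trained" weights, the two-feature inputs, and the binary labels are all hypothetical, and a real project would use a deep learning framework. The optional fine-tuning step is not shown.

```python
import math


class DenseLayer:
    """A tiny fully connected layer with a `trainable` flag."""

    def __init__(self, weights, biases):
        self.weights = [row[:] for row in weights]
        self.biases = list(biases)
        self.trainable = True

    def forward(self, x):
        return [sum(w * v for w, v in zip(row, x)) + b
                for row, b in zip(self.weights, self.biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def sigmoid(z):
    z = max(-60.0, min(60.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))


def predict(base, head, x):
    """Probability of class 1: frozen features followed by the new head."""
    return sigmoid(head.forward(relu(base.forward(x)))[0])


def train_head(base, head, samples, labels, epochs=200, lr=0.1):
    """Train only `head`; `base` is frozen and used as a feature extractor."""
    assert not base.trainable and head.trainable
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, y in zip(samples, labels):
            features = relu(base.forward(x))      # frozen forward pass
            p = sigmoid(head.forward(features)[0])
            total -= y * math.log(p + 1e-12) + (1 - y) * math.log(1 - p + 1e-12)
            grad = p - y                          # d(log loss)/d(logit)
            # Only the head's parameters are updated; the base is untouched.
            head.weights[0] = [w - lr * grad * f
                               for w, f in zip(head.weights[0], features)]
            head.biases[0] -= lr * grad
        losses.append(total / len(samples))
    return losses


# 1. Take layers from a previously trained model (placeholder weights here).
base = DenseLayer([[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]], [0.0] * 4)
# 2. Freeze them.
base.trainable = False
# 3. Add a new, trainable layer on top of the frozen layers.
head = DenseLayer([[0.0] * 4], [0.0])
# 4. Train the new layer on the new dataset (hypothetical samples and labels).
new_samples = [[1.0, 0.0], [-1.0, 0.0], [0.8, 0.2], [-0.9, 0.1]]
new_labels = [1, 0, 1, 0]
history = train_head(base, head, new_samples, new_labels)
print("first-epoch loss:", history[0], "last-epoch loss:", history[-1])
```

Because only the head's few parameters are updated, adding a new tube color amounts to a short training run on the new images rather than training the full model again.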

Cloud AI vs. Edge AI: Which one’s more suitable?

Differentiating between multiple blood collection tubes can be framed as a classic Machine Learning (ML) problem known as image classification. This raises the question of whether the inferencing should be done on the cloud (Cloud AI) or at the edge (Edge AI).

It is crucial for developers to consider the following to determine this:

- Latency: This is the time between sending a request and receiving a response. Cloud AI is fast, but the network round trip makes it not fast enough for applications such as automated hematology analyzers that need real-time responses.

- Connectivity: Automated hematology analyzers can’t afford downtime because it would jeopardize the results. Even a brief loss of connectivity can cause a cloud-dependent system to malfunction. Hence, they need real-time processing that does not rely on continuous connectivity, which edge AI provides.

- Security: Edge devices provide better security and privacy since data is stored on-site and does not all need to be sent to the cloud. Applications that involve sensitive information, such as medical records, are best processed locally.

- Energy consumption: Running inference at the edge means the blood analyzer sends less data to the cloud, which reduces the power drain of network communication. However, the added computational load of on-device ML increases power drain, potentially offsetting those savings.

- Costs: With high volumes of transactions in the cloud, the lifetime cloud communication cost for the device increases as well.

Considering all the above factors, edge AI is best suited to meet the application requirements of a camera-enabled blood collection tube classification system.

e-con Systems’ camera solution to classify blood collection tubes

Recently, e-con Systems developed an edge AI camera named e-CAM512_USB using NXP’s RT 1170 MCU – with advanced AI capabilities.

e-CAM512_USB is a 5MP USB 2.0 UVC color camera with an S-mount lens holder. It is a two-board solution containing a camera sensor board based on the 1/2.5″ AR0521 image sensor from onsemi® and a USB 2.0 Interface board. The powerful onboard ISP brings out the best image quality of this 2.2-micron pixel 5MP AR0521 CMOS image sensor – making it ideal for classifying blood collection tubes.

Also, using a custom-curated dataset, e-con Systems has developed a tailor-made CNN model to classify blood collection tubes. Benchmarks of the custom model against state-of-the-art models, along with inferencing times for various ML frameworks, will be shared in upcoming blogs.

If you are interested in integrating this cutting-edge AI-driven camera into your life sciences or medical application, please write to us at camerasolutions@e-consystems.com. You can also visit the Camera Selector to see our complete portfolio.

Balaji is a camera expert with 18+ years of experience in embedded product design, camera solutions, and product development. At e-con Systems, he has built numerous camera solutions in the fields of ophthalmology, laboratory equipment, dentistry, assistive technology, dermatology, and more. He has played an integral part in helping many customers build their products by integrating the right vision technology into them.