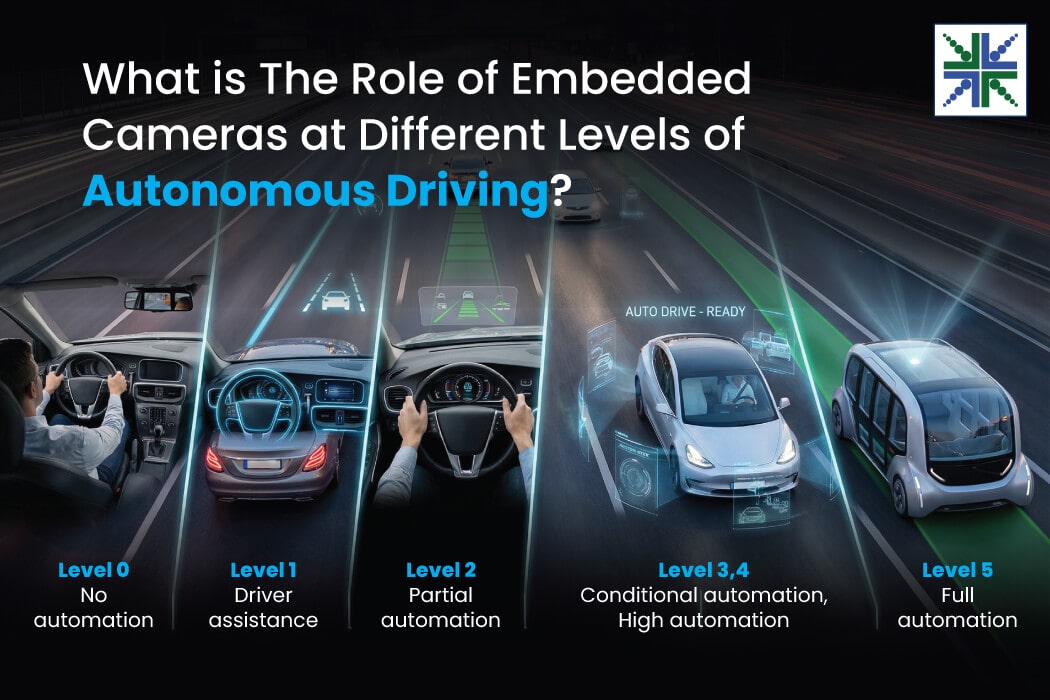

Autonomous driving is described in levels, each defined by how much of the driving task the vehicle handles instead of the driver. Every level depends on perception: the ability to notice what’s happening on the road and respond to it.

Cameras make that possible by equipping autonomous systems with AI vision. They capture the raw visual data that algorithms use to identify traffic signs, track vehicles, and interpret lane boundaries. Unlike other sensors, cameras deliver rich contextual detail, making them a critical layer in both driver assistance and fully automated navigation.

In this blog, you’ll get expert insights on why embedded cameras are critical for autonomous mobility, the types used in vehicles, and how they align with different levels of automation.

Importance of Autonomous Mobility Cameras

Every level of autonomous driving depends on perception that can operate in real time under variable conditions. Radar and LiDAR measure distance and velocity, but cameras provide the contextual detail required for decision-making. They enable vehicles to:

- Detect traffic signs and interpret traffic lights

- Recognize pedestrians, cyclists, and animals in complex environments

- Differentiate between lanes, curbs, and varied road surfaces

- Monitor the driver’s state of attention in semi-automated systems

Types of Mobility Cameras and Their Automation Level-Based Roles

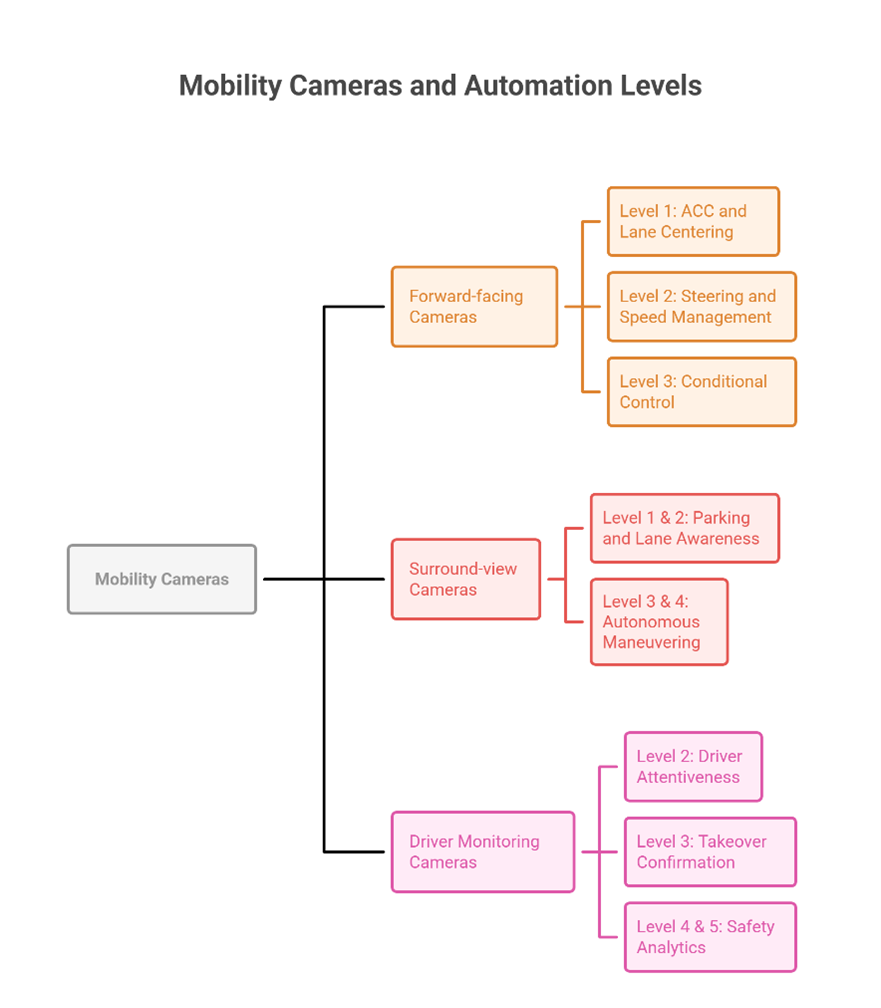

Vehicles use different categories of cameras to understand their surroundings, interpret road scenarios, and supervise driver behavior. As the system progresses from level 0 to level 5 automation, the contribution of each camera type expands, with higher expectations for resolution, reliability, and synchronized perception.

Forward-facing cameras: Long-range vision for guidance and hazard response

These cameras read road signs, interpret traffic signals, and track distant hazards. HDR strengthens performance in scenes with bright sun and deep shadow, and LED flicker control stabilizes traffic-light visibility. In level 1 automation, the system handles Adaptive Cruise Control (ACC) or lane centering, and the front-facing camera enables those functions by providing clean, stable long-range frames.

At level 0 and level 1, they support driver-assist functions such as lane departure alerts or steering correction. At level 2, they work with other sensors to manage both steering and speed together. At level 3, higher bandwidth and detail help the system take conditional control inside predefined scenarios.
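To see why LED flicker control matters for traffic-light visibility, it helps to look at the timing. LED signals are commonly driven by pulse-width modulation, so they are dark for part of every cycle, and a short exposure can fall entirely inside that dark gap. The sketch below is a simplified timing model under that assumption; it does not describe how any particular sensor implements flicker mitigation. The function names and the 100 Hz and 25% figures in the usage lines are illustrative, not values from a specific signal or camera.

```python
def longest_dark_gap_s(pwm_hz: float, duty_cycle: float) -> float:
    """Longest continuous time the LED is off within one PWM period."""
    if pwm_hz <= 0:
        raise ValueError("pwm_hz must be positive")
    if not 0 < duty_cycle <= 1:
        raise ValueError("duty_cycle must be in (0, 1]")
    return (1.0 - duty_cycle) / pwm_hz


def exposure_can_miss_led(exposure_s: float, pwm_hz: float, duty_cycle: float) -> bool:
    """True if an exposure window could fit entirely inside the LED's off-phase."""
    return exposure_s <= longest_dark_gap_s(pwm_hz, duty_cycle)


# Illustrative values only: a 100 Hz signal that is on 25% of the time.
short_exposure_risky = exposure_can_miss_led(0.0005, pwm_hz=100.0, duty_cycle=0.25)
long_exposure_risky = exposure_can_miss_led(0.010, pwm_hz=100.0, duty_cycle=0.25)
```

In this model, a short exposure chosen for a bright daytime scene is exactly the case where a frame can show the light as off, which is why flicker handling and HDR tend to be discussed together.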

Surround-view cameras: 360-degree awareness for maneuvering and coverage

Multiple lenses combine into a stitched overhead view, powered by accurate multi-camera synchronization and low-latency transmission. This gives the system visibility around the vehicle for blind-spot detection, parking assistance, and tight-space navigation. The synchronization capability and low-latency output keep each frame aligned as the vehicle moves, supporting early-stage assistance and high-level maneuver planning.

At level 1 and level 2, they support parking and lane-side awareness. At level 3 and level 4, they contribute to fuller autonomous maneuvering inside restricted operational zones.
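The synchronization requirement for surround-view stitching can be made concrete with a small check. The sketch below assumes each camera reports a capture timestamp in microseconds for the frame it contributes to a stitched view; the camera names, the tolerance, and the helper functions are hypothetical and only illustrate the idea. It computes the worst-case capture skew across the frame set and how far the vehicle travels during that skew.

```python
from typing import Dict


def max_skew_us(timestamps_us: Dict[str, int]) -> int:
    """Largest capture-time difference between any two cameras in one frame set."""
    if not timestamps_us:
        raise ValueError("frame set is empty")
    values = timestamps_us.values()
    return max(values) - min(values)


def is_synchronized(timestamps_us: Dict[str, int], tolerance_us: int) -> bool:
    """True if every frame in the set was captured within the tolerance window."""
    return max_skew_us(timestamps_us) <= tolerance_us


def motion_during_skew_m(speed_mps: float, skew_us: int) -> float:
    """Distance the vehicle covers between the earliest and latest capture."""
    return speed_mps * skew_us / 1_000_000


# Hypothetical frame set: the rear camera captured 20 ms after the others.
frame_set = {"front": 10_000_000, "left": 10_000_000, "right": 10_001_000, "rear": 10_020_000}
skew = max_skew_us(frame_set)
drift_m = motion_during_skew_m(speed_mps=5.0, skew_us=skew)
aligned = is_synchronized(frame_set, tolerance_us=5_000)
```

A skew that looks small in time can still be a visible seam in the stitched view, because the vehicle keeps moving between the first and last capture.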

Driver monitoring cameras: Interior vigilance for attention and fatigue detection

These cameras track gaze direction, eyelid movement, and head orientation. Near-infrared capability supports day-night operation, and global shutter sensors prevent distortion when the head moves quickly, giving downstream systems clean data for supervision and fallback. This visual stability becomes critical whenever the system must judge driver readiness.

They play a key role at level 2, where the driver must stay attentive during partial automation. At level 3, they confirm a prompt takeover when the system requests one. At levels 4 and 5, they remain relevant in shared vehicles or fleet use for safety analytics, even when driver intervention is not required.
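The level-by-level roles described in this section can be collected into a simple lookup. This is only a sketch that restates the text above as data; the structure and the `roles_at_level` helper are illustrative. A camera type missing from a result means this article does not describe a role for it at that level, not that the camera is unused there.

```python
from typing import Dict, List, Tuple

# (camera type, automation levels, role), paraphrased from the section above.
ROLES: List[Tuple[str, Tuple[int, ...], str]] = [
    ("forward_facing", (0, 1), "driver-assist functions such as lane departure alerts or steering correction"),
    ("forward_facing", (2,), "combined steering and speed management with other sensors"),
    ("forward_facing", (3,), "conditional control inside predefined scenarios"),
    ("surround_view", (1, 2), "parking and lane-side awareness"),
    ("surround_view", (3, 4), "autonomous maneuvering inside restricted operational zones"),
    ("driver_monitoring", (2,), "keeping the driver attentive during partial automation"),
    ("driver_monitoring", (3,), "confirming a prompt takeover when the system requests one"),
    ("driver_monitoring", (4, 5), "safety analytics in shared vehicles or fleet use"),
]


def roles_at_level(level: int) -> Dict[str, str]:
    """Return the role of each camera type described for the given automation level."""
    if not 0 <= level <= 5:
        raise ValueError("automation level must be between 0 and 5")
    return {camera: role for camera, levels, role in ROLES if level in levels}


# Print the described roles at level 2, one camera type per line.
for camera, role in roles_at_level(2).items():
    print(f"{camera}: {role}")
```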

e-con Systems Offers High-Performance Autonomous Mobility Cameras

e-con Systems has been designing, developing, and manufacturing OEM cameras since 2003. Our mobility cameras feature high resolution for detailed object recognition, HDR for extreme contrast scenes, and LED flicker control for reliable performance in urban lighting.

With multi-camera synchronization, autonomous systems can perform consistently across wide fields of view. Our cameras also come with rugged housings and automotive-grade connectors to ensure durability in vibration, dust, and rain.

In short, e-con Systems helps autonomous mobility developers shorten design cycles and scale their platforms with confidence.

See our full range of autonomous mobility camera solutions.

Use our Camera Selector to browse our complete portfolio.

Looking for the best-fit camera for your autonomous mobility system? Please get in touch with us at camerasolutions@e-consystems.com.

Frequently Asked Questions

- What role do cameras play at Level 0 and Level 1 automation?

At these levels, cameras function as supportive aids rather than full control systems. They assist with tasks like rear visibility and lane departure alerts. The driver remains responsible for every decision while cameras provide situational cues.

- Why is multi-camera synchronization important in autonomous vehicles?

Vehicles deploy several cameras to cover a complete field of view. If the frames from each lens are captured at different times, stitched images or fused data become misaligned. Synchronization ensures the perception system processes consistent inputs across all viewpoints.

- How do camera requirements change from Level 2 to Level 3 automation?

Level 2 depends on cameras to manage steering and speed while the driver supervises. Level 3 shifts toward conditional independence, requiring higher resolution and faster data flow. This progression increases the demand on camera performance and system integration.

- What challenges do cameras face in high automation (Level 4 and Level 5)?

In these stages, cameras replace human vision as the primary source of perception. They must capture clear visuals in rain, fog, low light, and glare. Redundancy and advanced imaging features are required because no driver is present to intervene.

- How does e-con Systems support building autonomous mobility platforms?

e-con Systems provides embedded cameras equipped with HDR, LED flicker control, rugged housings, and synchronized multi-camera capability. Each camera is designed to function in harsh outdoor conditions with dust, vibration, and varying light. Integration with leading processors reduces design complexity and supports faster deployment.

Suresh Madhu is a product marketing manager with 16+ years of experience in embedded product design, technical architecture, SOM product design, camera solutions, and product development. He has played an integral part in helping many customers build their products by integrating the right vision technology into them.